How to Avoid Unauthorized AI Model Training on Proprietary Data

Introduction

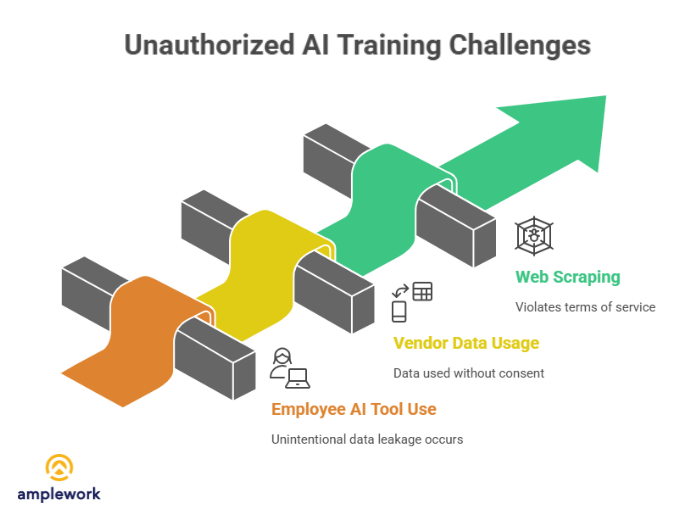

Companies increasingly discover that proprietary data, including customer information, code repositories, and trade secrets, has been used to train AI models without authorization. This happens when employees paste sensitive data into public AI tools, when vendors use client data without consent, or when scrapers harvest web content. Unauthorized training can expose competitive intelligence and customer insights. Preventing it requires organizations to implement technical controls, enforce policies, and manage vendors so that valuable data stays protected in the AI era.

How Unauthorized AI Training Happens

Employee Use of Public AI Tools

Employees sometimes paste proprietary information into public AI tools such as ChatGPT or Claude when looking for help. Depending on the provider and account settings, consumer versions of these tools may retain inputs and use them to improve future models, so a well-intentioned request can expose source code, customer data, or business strategy.

Vendor and Partner Data Usage

Third-party vendors and AI service providers may use client data for model training unless contracts explicitly prohibit it. Reviewing terms of service, restricting training permissions, and requiring data protection clauses are critical for safeguarding sensitive information.

Web Scraping and Harvesting

AI developers scrape publicly available websites, documentation, forums, and code repositories to build training datasets. Public availability does not imply consent, and such harvesting can violate a site's terms of service and capture data its owners never intended for AI use.

Technical Controls to Prevent Unauthorized AI Training

Block Access to Public AI Services

Implementing network controls to block public AI services prevents employees from exposing sensitive data. Firewalls, DNS filtering, proxy restrictions, and VPN enforcement establish baseline protection against accidental information leakage.
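The matching logic behind a DNS filter or proxy rule is simple to sketch. The Python below is a minimal illustration, assuming a hypothetical blocklist of public AI domains and a hypothetical approved enterprise endpoint (`ai.internal.example.com`). In a real deployment this decision lives in the resolver, firewall, or secure web gateway rather than in application code.

```python
# Hypothetical blocklist of public AI service domains (example entries only;
# maintain your own list from threat-intel or vendor feeds).
BLOCKED_AI_DOMAINS = {
    "chatgpt.com",
    "chat.openai.com",
    "claude.ai",
    "gemini.google.com",
}

# Hypothetical approved enterprise endpoint; allowlist entries win over blocks.
ALLOWED_AI_DOMAINS = {
    "ai.internal.example.com",
}


def _matches(host: str, domains: set[str]) -> bool:
    """True if host equals a listed domain or is a subdomain of one."""
    host = host.lower().rstrip(".")
    # The "." prefix stops "notchatgpt.com" from matching "chatgpt.com".
    return any(host == d or host.endswith("." + d) for d in domains)


def is_blocked_host(host: str) -> bool:
    """Decide whether a DNS query or proxy request for `host` should be denied."""
    if _matches(host, ALLOWED_AI_DOMAINS):
        return False
    return _matches(host, BLOCKED_AI_DOMAINS)
```

Suffix matching with a leading dot covers subdomains such as `www.chatgpt.com` without accidentally catching unrelated domains that merely end in the same letters.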

Deploy Enterprise AI Solutions

Providing approved enterprise AI tools with proper data protection reduces the use of unauthorized AI services. Features such as a commitment not to train on customer data, access controls, audit logging, and monitoring let employees work productively without putting proprietary data at risk.
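Access control and audit logging can be pictured as a thin check in front of the approved tool. This is a minimal sketch, assuming hypothetical directory groups and a JSON-lines audit format of our own design; an actual enterprise AI platform would normally provide these through its SSO integration and built-in audit features.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical directory groups permitted to use the approved enterprise AI tool.
APPROVED_GROUPS = {"engineering", "legal", "support"}


def authorize_and_audit(user: str, groups: set[str], prompt: str,
                        audit_log: list[str]) -> bool:
    """Check access for one prompt and append a JSON audit entry either way.

    The entry stores a SHA-256 hash and the length of the prompt rather than
    its text, so the audit trail does not become another copy of sensitive data.
    """
    allowed = bool(APPROVED_GROUPS & groups)
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "allowed": allowed,
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "prompt_chars": len(prompt),
    }
    audit_log.append(json.dumps(entry))
    return allowed
```

Logging denied requests as well as allowed ones is deliberate: repeated denials for one user are exactly the signal an auditor wants to see.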

Implement Data Loss Prevention (DLP)

DLP systems detect and block sensitive information being transmitted to unauthorized destinations. By scanning traffic, files, and emails, they enforce policies, alert users, and maintain audit trails to prevent accidental or deliberate leaks.
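At its core, content inspection in DLP is pattern matching plus validation to cut false positives. The Python below is a simplified sketch with a few illustrative rules (AWS access key IDs, email addresses, and card numbers filtered by the Luhn checksum); the rule set and function names are assumptions, and commercial DLP products add file parsing, fingerprinting, and context analysis on top of this.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    rule: str
    value: str


# Illustrative detection rules; real DLP policies cover many more data types.
RULES = {
    # Long-term AWS access key IDs: "AKIA" followed by 16 uppercase letters/digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "email_address": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    # 13 to 19 digits, optionally separated by single spaces or dashes.
    "card_number": re.compile(r"\b\d(?:[ -]?\d){12,18}\b"),
}


def luhn_valid(candidate: str) -> bool:
    """Luhn checksum, used to discard digit runs that are not card numbers."""
    digits = [int(c) for c in candidate if c.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def scan(text: str) -> list[Finding]:
    """Return every rule match in `text`, skipping card numbers that fail Luhn."""
    findings = []
    for rule, pattern in RULES.items():
        for match in pattern.finditer(text):
            value = match.group(0)
            if rule == "card_number" and not luhn_valid(value):
                continue
            findings.append(Finding(rule, value))
    return findings


def should_block_outbound(text: str) -> bool:
    """Block the paste or upload if any rule fires; a real system would also alert and log."""
    return bool(scan(text))
```

For example, a paste containing AWS's documented sample key `AKIAIOSFODNN7EXAMPLE` would be blocked, while an order number that happens to be 16 digits long but fails the Luhn check would pass.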

Use robots.txt and API Rate Limiting

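Configuring robots.txt, rate limiting, CAPTCHAs, and IP filtering deters web scraping. None of these is foolproof: robots.txt is only a request that well-behaved crawlers choose to honor, and determined scrapers can rotate IP addresses. Together, however, they reduce automated harvesting, slow unauthorized AI training efforts, and help protect publicly accessible content.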
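The two main controls can be sketched together in Python. The robots.txt below opts out of several AI-training crawlers using user-agent tokens their operators have published (GPTBot for OpenAI, ClaudeBot for Anthropic, CCBot for Common Crawl, Google-Extended for Google); check each operator's documentation for the current list. The standard library's `urllib.robotparser` confirms how a compliant crawler would read it. The token-bucket limiter is our own minimal sketch with hypothetical parameters; because it is enforced server-side, it also applies to scrapers that ignore robots.txt.

```python
from urllib.robotparser import RobotFileParser

# Opt out of selected AI-training crawlers; allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
User-agent: ClaudeBot
User-agent: CCBot
User-agent: Google-Extended
Disallow: /

User-agent: *
Allow: /
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text the way a compliant crawler would."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


class TokenBucket:
    """Per-client token bucket: bursts of up to `capacity` requests,
    refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float) -> None:
        self.capacity = capacity
        self.rate = rate
        self._state: dict[str, tuple[float, float]] = {}  # client -> (tokens, last_seen)

    def allow(self, client_id: str, now: float) -> bool:
        tokens, last = self._state.get(client_id, (self.capacity, now))
        # Refill for the time elapsed since this client's last request.
        tokens = min(self.capacity, tokens + (now - last) * self.rate)
        if tokens >= 1:
            self._state[client_id] = (tokens - 1, now)
            return True
        self._state[client_id] = (tokens, now)
        return False
```

In use, a server would call `bucket.allow(client_ip, time.monotonic())` per request and return HTTP 429 when it is `False`; passing the clock in explicitly keeps the limiter easy to test.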

Watermark and Track Data Usage

Embedding invisible watermarks, unique data fingerprints, or canary tokens in documents allows organizations to detect if proprietary data appears in AI training datasets, providing accountability and evidence for legal or compliance actions.
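Canary tokens are the simplest of these techniques to illustrate. The Python sketch below derives a unique, unguessable marker per document with HMAC-SHA256 and later searches a suspect dataset or model output for any issued marker. The `cnry-` prefix, the 20-character length, and the function names are hypothetical conventions chosen for illustration, and detection only works if the marker survives verbatim in the text you inspect.

```python
import hashlib
import hmac
import re

# Hypothetical placeholder: load a real key from your secrets manager.
SECRET_KEY = b"replace-with-a-key-from-your-secrets-manager"

CANARY_RE = re.compile(r"\bcnry-[0-9a-f]{20}\b")


def make_canary(doc_id: str, key: bytes = SECRET_KEY) -> str:
    """Derive a stable marker for one document; without the key it cannot be guessed."""
    digest = hmac.new(key, doc_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"cnry-{digest[:20]}"


def find_leaked_docs(text: str, registry: dict[str, str]) -> set[str]:
    """Return IDs of documents whose canary appears in `text`.

    `registry` maps each issued canary string back to its document ID.
    """
    return {registry[tok] for tok in CANARY_RE.findall(text) if tok in registry}
```

Keeping a registry of issued canaries, rather than relying on the pattern alone, means a match can be tied to a specific document and ignores look-alike strings you never issued.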

Policy and Legal Protections

Establish Clear AI Usage Policies

Create comprehensive policies defining acceptable AI tool usage, prohibiting unauthorized data sharing, and specifying approved enterprise solutions. Clear policies provide a foundation for enforcement and employee accountability.

Policy components include:

- Approved AI service lists

- Prohibited data types

- Usage scenario guidelines

- Violation consequences

- Reporting procedures

Well-communicated policies set expectations and establish organizational norms.

Negotiate Strong Vendor Contracts

Review and negotiate AI service contracts so that they explicitly prohibit training on your data. Rather than accepting default terms of service, negotiate addenda that protect proprietary information regardless of the provider's standard policies.

Contract provisions include:

- Explicit no-training clauses

- Data deletion requirements

- Subcontractor restrictions

- Audit rights and transparency

- Breach notification requirements

Strong contracts provide legal recourse when technical controls fail.

Implement Terms of Service Enforcement

Clearly state in your own terms of service that scraping, data harvesting, and AI training on your content violate those terms and constitute unauthorized use. This establishes a legal foundation for action against violators.

Terms provisions include:

- Scraping prohibition clauses

- AI training restriction language

- Statements of enforcement rights

- Damage claim provisions

- Jurisdiction specifications

Legal terms give you grounds for action even over publicly accessible content, although how enforceable they are varies by jurisdiction.

Conduct Regular Compliance Audits

Audit employee AI tool usage, vendor contracts, and data protection practices regularly, ensuring ongoing compliance. Technology and usage patterns evolve, and static policies without enforcement lose effectiveness quickly.

Audit activities include:

- Network traffic analysis

- Employee usage reviews

- Vendor contract verification

- Policy compliance checking

- Incident investigation

Continuous monitoring helps catch violations before they cause significant damage.
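Network traffic analysis, the first audit activity listed above, often starts with web proxy logs. The Python below is a minimal sketch that counts requests and bytes sent to public AI domains per user; the four-field log format and the domain list are hypothetical simplifications, since real proxies emit richer formats that would need their own parser.

```python
from collections import Counter

# Hypothetical set of public AI domains to flag during an audit.
AI_DOMAINS = ("chatgpt.com", "claude.ai", "gemini.google.com")


def is_ai_host(host: str) -> bool:
    """True if host is a listed AI domain or one of its subdomains."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in AI_DOMAINS)


def ai_usage_by_user(log_lines):
    """Count requests and bytes sent to public AI domains per user.

    Assumes a simplified log format: "<timestamp> <user> <host> <bytes_sent>".
    Malformed lines are skipped rather than raising.
    """
    requests = Counter()
    bytes_sent = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 4 or not parts[3].isdigit():
            continue
        _, user, host, size = parts
        if is_ai_host(host):
            requests[user] += 1
            bytes_sent[user] += int(size)
    return requests, bytes_sent
```

Tracking bytes sent alongside request counts matters because a single large upload to an AI service is usually more concerning than many small queries.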

Employee Training and Awareness

- Educate About Risks: Many employees don’t understand how AI services use input data. Training programs that explain the risks and consequences can significantly improve compliance.

- Provide Approved Alternatives: Give employees sanctioned AI tools meeting legitimate productivity needs, reducing temptation to use unauthorized services.

- Make Reporting Easy: Establish simple processes for reporting suspected violations or requesting guidance about specific AI usage scenarios.

- Recognize Good Practices: Acknowledge and reward employees following data protection policies, encouraging a cultural shift toward security consciousness.

The Amplework Data Protection Advantage

At Amplework Software, we help organizations implement comprehensive strategies to prevent unauthorized AI training on proprietary data. Our AI Security & Compliance services include technical controls, policy development, and vendor management, protecting your valuable information.

Our Data Protection Services:

- Enterprise AI solution deployment

- DLP system implementation

- AI usage policy development

- Vendor contract review and negotiation

- Employee training programs

Our AI consulting services help organizations balance AI productivity benefits with necessary data protection. We design solutions enabling employees to leverage AI capabilities safely without exposing proprietary information.

Final Words

Preventing unauthorized AI training on proprietary data requires layered defenses that combine technical controls, policy enforcement, vendor management, and employee education. No single measure provides complete protection; comprehensive strategies addressing multiple exposure vectors deliver effective security.

The AI era demands new data protection approaches. Organizations that implement strong preventive measures maintain control over proprietary information, while those relying on outdated strategies face a far greater risk of exposure.

sales@amplework.com

(+91) 9636-962-228
