Building a Scalable AI Integration Architecture: Frameworks, Patterns, and Best Practices

Organizations across industries are adopting AI to enhance decision-making, automate workflows, improve customer experiences, and unlock new revenue opportunities. In fact, over 80% of enterprises are already integrating AI into their business strategies, highlighting its growing role in driving digital transformation. However, deploying AI without a structured approach can lead to fragmented systems, inefficiencies, and missed opportunities.

A well-designed AI Integration Architecture ensures that AI components, such as machine learning models, data pipelines, and business applications, work together seamlessly. It provides a scalable, reliable, and high-performing foundation that supports enterprise growth and innovation.

By leveraging the right frameworks, architecture patterns, and best practices, businesses can build resilient AI systems that scale efficiently, adapt to change, and deliver measurable value.

What Is AI Integration Architecture?

AI Integration Architecture is a structured framework connecting AI components, including machine learning models, data pipelines, business applications, and cloud services, into a cohesive, scalable system. Proper architecture design ensures seamless communication between components, optimizes performance, and supports enterprise growth.

Key elements include:

- AI System Integration: Connecting AI modules with business applications.

- Modular AI Architecture: Breaking systems into manageable, scalable components.

- Data Pipeline Architecture for AI: Efficiently managing the flow of data from source to model deployment.

Frameworks for AI Integration

Selecting the right AI Integration Frameworks is critical to building scalable, resilient, and maintainable AI systems. Frameworks provide the foundation for system design, model deployment, workflow automation, and cloud integration.

1. TensorFlow Extended (TFX)

TFX is a robust framework for building production-ready AI pipelines. It supports data preprocessing, model training, validation, deployment, and monitoring, making it ideal for enterprise-scale AI solutions.

2. Kubeflow

Kubeflow enables MLOps automation on Kubernetes. It allows teams to deploy, scale, and manage machine learning workflows across multi-cloud environments efficiently.

3. Apache Airflow

Airflow is widely used for workflow orchestration and managing complex data pipelines. It enables scheduling, dependency management, and automated monitoring of AI workflows.
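As a rough illustration of what dependency management means in an orchestrator, the sketch below orders a handful of pipeline tasks so that each one runs only after the tasks it depends on. It uses Python's standard-library `graphlib` rather than Airflow itself, and the task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical task names; each key maps to the set of tasks it depends on.
PIPELINE = {
    "ingest": set(),
    "validate": {"ingest"},
    "train": {"validate"},
    "evaluate": {"train"},
    "deploy": {"evaluate"},
}


def execution_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return a run order in which every task follows its dependencies."""
    # static_order() raises graphlib.CycleError if the graph contains a cycle.
    return list(TopologicalSorter(dependencies).static_order())


ORDER = execution_order(PIPELINE)
```

A real orchestrator adds scheduling, retries, and monitoring on top of this ordering step.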

4. MLflow

MLflow is a framework that manages the end-to-end lifecycle of machine learning models, including experimentation, reproducibility, and deployment. It enhances the scalability of AI systems by streamlining model tracking and versioning.
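To make model tracking and versioning concrete, here is a minimal in-memory sketch. It is not the MLflow API; `ModelRegistry`, `register`, and `best` are hypothetical names used only to show the idea of recording parameters and metrics against incrementing versions.

```python
from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    version: int
    params: dict
    metrics: dict


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry; a stand-in, not a real tracking server."""

    _versions: dict[str, list[ModelVersion]] = field(default_factory=dict)

    def register(self, name: str, params: dict, metrics: dict) -> ModelVersion:
        # Versions start at 1 and increase by one per registration of the same name.
        versions = self._versions.setdefault(name, [])
        entry = ModelVersion(len(versions) + 1, dict(params), dict(metrics))
        versions.append(entry)
        return entry

    def best(self, name: str, metric: str) -> ModelVersion:
        # Pick the version with the highest value for the given metric.
        return max(self._versions[name], key=lambda v: v.metrics[metric])
```

For example, registering two runs of a model named `"churn"` and calling `best("churn", "auc")` returns whichever run recorded the higher AUC.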

5. BentoML

BentoML provides a framework for model serving and deployment, enabling AI models to be packaged and deployed as microservices. It simplifies API integration and ensures consistency across development and production environments.

6. Enterprise AI Platforms

Platforms like Databricks, Amazon SageMaker, and Azure Machine Learning provide end-to-end AI Integration Frameworks that support data ingestion, model training, deployment, monitoring, and collaboration across teams.

Key Benefits of Using AI Frameworks:

- Faster development and deployment cycles

- Standardized architecture and integration patterns

- Enhanced model scalability and reliability

- Simplified maintenance and monitoring

How to Build Scalable AI Architectures

Building scalable AI architectures requires strategic planning, modular design, and automation. A scalable system can adapt to growing data volumes, increased user demand, and new business requirements. Here’s a step-by-step approach:

1. Define Enterprise Goals and AI Use Cases

Understanding business objectives is the foundation of a scalable architecture. Identify the AI use cases, desired outcomes, and performance metrics. For instance, in retail, the goal might be personalized recommendations to increase engagement, while in healthcare, predictive diagnostics could be the priority.

2. Adopt Modular Architecture

A modular AI architecture separates components like data ingestion, model training, inference, and reporting. This allows independent scaling and maintenance, reducing the risk of system-wide failures.
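One simple way to picture this separation is to treat each component as an independent stage with the same input and output shape. The sketch below assumes three hypothetical stages (`ingest`, `infer`, `report`) and a threshold rule standing in for a real model.

```python
from typing import Callable, Iterable

# Every stage takes a list of records and returns a list of records.
Stage = Callable[[list[dict]], list[dict]]


def ingest(_: list[dict]) -> list[dict]:
    # Stand-in for reading from a source system.
    return [{"user": "a", "spend": 120.0}, {"user": "b", "spend": 15.0}]


def infer(rows: list[dict]) -> list[dict]:
    # Stand-in for a model: a fixed threshold instead of learned weights.
    return [{**r, "segment": "high" if r["spend"] >= 100 else "low"} for r in rows]


def report(rows: list[dict]) -> list[dict]:
    # Keep only the fields a downstream dashboard would need.
    return [{"user": r["user"], "segment": r["segment"]} for r in rows]


def run_pipeline(stages: Iterable[Stage]) -> list[dict]:
    """Run the stages in order, passing each stage's output to the next."""
    data: list[dict] = []
    for stage in stages:
        data = stage(data)
    return data
```

Because the stages share one contract, any of them can be replaced, tested, or scaled separately without touching the others.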

3. Build Robust Data Pipelines

Reliable data pipelines ensure clean, consistent, and compliant data flows. Incorporate streaming, batch processing, and workflow orchestration tools to handle enterprise-scale data efficiently.
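A small validation step is often the first guard in such a pipeline. The sketch below assumes a hypothetical two-field schema and splits a batch into accepted and rejected records; production pipelines would typically use a dedicated validation library.

```python
# Hypothetical schema: field name -> required Python type.
REQUIRED_FIELDS = {"id": int, "amount": float}


def validate_batch(records: list[dict]) -> tuple[list[dict], list[dict]]:
    """Split records into (valid, rejected) based on required fields and types."""
    valid, rejected = [], []
    for record in records:
        # A missing field yields None, which fails the isinstance check.
        ok = all(
            isinstance(record.get(name), kind)
            for name, kind in REQUIRED_FIELDS.items()
        )
        (valid if ok else rejected).append(record)
    return valid, rejected
```

Rejected records can then be routed to a quarantine store for review instead of silently reaching the model.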

4. Leverage Cloud Infrastructure

Cloud platforms provide flexibility, scalability, and cost efficiency. They enable multi-cloud AI integration, easy deployment, and automated scaling of resources to handle dynamic workloads.

5. Implement MLOps Practices

MLOps automates the entire model lifecycle, including AI model training, deployment, monitoring, and retraining. This automation ensures that models remain accurate, efficient, and high-performing without requiring excessive manual intervention.
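The decision logic behind that automation can be quite small. The following sketch assumes accuracy is the metric of interest and uses hypothetical action names and a hypothetical drift tolerance; real pipelines would plug in their own metrics and thresholds.

```python
from typing import Optional


def next_action(
    production_accuracy: float,
    candidate_accuracy: Optional[float],
    live_accuracy: float,
    drift_tolerance: float = 0.05,
) -> str:
    """Decide the next lifecycle step for a deployed model.

    production_accuracy: accuracy measured when the current model was deployed.
    candidate_accuracy: accuracy of a newly trained model, or None if there is none.
    live_accuracy: accuracy of the current model on recent production data.
    """
    # A better candidate is promoted before anything else is considered.
    if candidate_accuracy is not None and candidate_accuracy > production_accuracy:
        return "promote_candidate"
    # Otherwise, retrain if live accuracy has dropped beyond the tolerance.
    if production_accuracy - live_accuracy > drift_tolerance:
        return "retrain"
    return "keep_serving"
```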

6. Ensure Compatibility with Legacy Systems

Use API gateways and incremental integration strategies to connect AI systems with existing enterprise infrastructure. Synchronize data across platforms to maintain consistency and prevent disruption.
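An adapter layer is one common way to do this incrementally. In the sketch below, `LegacyInventorySystem` is a made-up stand-in for an older system that returns pipe-delimited text, and `InventoryAdapter` exposes it to AI services as structured data.

```python
class LegacyInventorySystem:
    """Stand-in for an older system that returns pipe-delimited text."""

    def fetch(self, sku: str) -> str:
        return f"{sku}|42|WAREHOUSE-7"


class InventoryAdapter:
    """Wraps the legacy system behind a structured, dictionary-based interface."""

    def __init__(self, legacy: LegacyInventorySystem) -> None:
        self._legacy = legacy

    def get_stock(self, sku: str) -> dict:
        # Translate the legacy wire format into typed fields.
        sku_out, quantity, location = self._legacy.fetch(sku).split("|")
        return {"sku": sku_out, "quantity": int(quantity), "location": location}
```

The legacy system stays untouched, and new services depend only on the adapter's interface.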

7. Prioritize Security and Compliance

Implement encryption, access controls, and auditing. Adhere to regulations such as GDPR to protect sensitive enterprise data.
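As a minimal sketch of access control and auditing, the example below checks a role against a permission table and records every decision. It also shows keyed hashing as one way to pseudonymize an identifier before it enters a pipeline. The roles, actions, and key are hypothetical, and keyed hashing is not a substitute for encryption or a compliance guarantee on its own.

```python
import hashlib
import hmac

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "analyst": {"read_predictions"},
    "ml_engineer": {"read_predictions", "deploy_model"},
}


def authorize(role: str, action: str, audit_log: list) -> bool:
    """Check whether a role may perform an action and record the decision."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({"role": role, "action": action, "allowed": allowed})
    return allowed


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (hex string)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()
```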

Best Practices for AI System Integration

Integrating AI successfully requires more than technology; it involves adopting best practices that ensure scalability, reliability, and efficiency.

1. Standardize Interfaces

Using standardized interfaces and protocols simplifies communication between AI components and external systems. It reduces integration complexity and makes maintenance and future scaling easier.
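A versioned message contract is one way to standardize an interface. The sketch below assumes a hypothetical `PredictionRequest` shape and a schema version of `"1.0"`; the point is that every producer and consumer encodes and decodes the same structure.

```python
import json
from dataclasses import asdict, dataclass

SUPPORTED_SCHEMA_VERSION = "1.0"  # hypothetical version string


@dataclass(frozen=True)
class PredictionRequest:
    schema_version: str
    model: str
    features: dict


def encode(request: PredictionRequest) -> str:
    """Serialize a request to JSON with stable key ordering."""
    return json.dumps(asdict(request), sort_keys=True)


def decode(payload: str) -> PredictionRequest:
    """Parse a JSON payload, rejecting unsupported schema versions."""
    data = json.loads(payload)
    if data.get("schema_version") != SUPPORTED_SCHEMA_VERSION:
        raise ValueError("unsupported schema_version")
    return PredictionRequest(**data)
```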

2. Implement End-to-End Automation

Automating workflows end to end reduces human error, accelerates decision-making, and keeps processes consistent, which makes operations more efficient and systems more reliable.

3. Adopt Scalable Architecture Patterns

Implement architecture patterns like pipelines, microservices, and event-driven designs. These patterns allow systems to scale horizontally and vertically, accommodating increasing workloads and dynamic business demands.
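To show the event-driven pattern in its simplest form, here is an in-process publish/subscribe sketch. The `EventBus` class and topic names are hypothetical; in production this role is usually played by a message broker.

```python
from collections import defaultdict
from typing import Callable


class EventBus:
    """Minimal in-process publish/subscribe bus."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._handlers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> int:
        """Deliver the event to every subscriber and return how many were called."""
        # .get() avoids creating an empty entry for topics nobody subscribed to.
        handlers = self._handlers.get(topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)
```

Publishers never reference subscribers directly, so a new consumer such as a scoring service can be added without changing the code that emits events.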

4. Monitor and Optimize AI Performance

Continuously track model performance, data quality, and infrastructure health. Regular monitoring ensures accurate, reliable results and helps quickly identify issues requiring optimization or retraining.
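One lightweight way to track model performance is a rolling window over recent predictions. The sketch below assumes ground-truth labels eventually arrive for each prediction; the window size and threshold are illustrative defaults, not recommendations.

```python
from collections import deque
from typing import Optional


class RollingAccuracyMonitor:
    """Tracks accuracy over the most recent `window` labelled predictions."""

    def __init__(self, window: int = 100, threshold: float = 0.8) -> None:
        # A bounded deque drops the oldest outcome once the window is full.
        self._outcomes: deque = deque(maxlen=window)
        self._threshold = threshold

    def record(self, prediction, actual) -> None:
        self._outcomes.append(prediction == actual)

    @property
    def accuracy(self) -> Optional[float]:
        if not self._outcomes:
            return None
        return sum(self._outcomes) / len(self._outcomes)

    def is_degraded(self) -> bool:
        """True when there is data and accuracy is below the threshold."""
        current = self.accuracy
        return current is not None and current < self._threshold
```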

5. Prioritize Documentation and Knowledge Sharing

Maintain clear documentation and standardized procedures to support team collaboration, accelerate onboarding, and preserve institutional knowledge, ensuring long-term maintainability and scalable AI integration.

Cloud-Based AI Integration Patterns

Cloud integration is a cornerstone of scalable AI systems. Using cloud-native patterns provides flexibility, reliability, and operational efficiency.

1. Multi-Cloud Architecture

A multi-cloud AI architecture distributes workloads across multiple cloud providers, reducing dependency on a single vendor and enhancing fault tolerance.
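The fault-tolerance benefit can be sketched as a simple failover loop: try the preferred provider first and fall back to the next one if it is unavailable. The provider names and the `ProviderUnavailable` exception are hypothetical; real clients would raise their own error types.

```python
from typing import Any, Callable, Sequence


class ProviderUnavailable(Exception):
    """Raised by a provider call that cannot be served right now."""


def call_with_failover(
    providers: Sequence[tuple[str, Callable[[dict], Any]]],
    payload: dict,
) -> tuple[str, Any]:
    """Try each (name, callable) in order; return the first successful result."""
    errors = []
    for name, invoke in providers:
        try:
            return name, invoke(payload)
        except ProviderUnavailable as exc:
            errors.append(f"{name}: {exc}")
    # Every provider failed, or none were configured.
    raise ProviderUnavailable("; ".join(errors) or "no providers configured")
```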

2. Microservices Deployment

Deploy AI functionalities as microservices in the cloud. Each service can be scaled independently based on workload requirements.

3. Real-Time Event Streaming

Cloud-based AI systems often rely on real-time event streams to process data instantly. Tools like Kafka, Kinesis, and cloud event hubs enable high-throughput, low-latency pipelines.

4. Containerization and Orchestration

Containers (Docker) and orchestration tools (Kubernetes) ensure reproducible deployments, easier scaling, and simplified model management.

5. Model Serving and APIs

Cloud platforms provide scalable endpoints for serving AI models. RESTful APIs or gRPC endpoints allow business applications to access AI capabilities efficiently.
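A REST prediction endpoint can be sketched with nothing but the Python standard library. In the example below, the `/predict` path, the request shape, and the placeholder `predict` function (which just averages numeric features) are all assumptions made for illustration.

```python
import json
from http.server import BaseHTTPRequestHandler


def predict(features: dict) -> float:
    # Placeholder model: the mean of the numeric feature values.
    values = [v for v in features.values() if isinstance(v, (int, float))]
    return sum(values) / len(values) if values else 0.0


def handle_predict(body: bytes) -> tuple[int, dict]:
    """Turn a raw request body into an (HTTP status, JSON-serializable payload) pair."""
    try:
        features = json.loads(body)["features"]
    except (ValueError, KeyError, TypeError):
        return 400, {"error": "expected a JSON object with a 'features' field"}
    if not isinstance(features, dict):
        return 400, {"error": "'features' must be a JSON object"}
    return 200, {"prediction": predict(features)}


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_predict(self.rfile.read(length))
        data = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)
```

To try it locally, the handler can be served with `http.server.HTTPServer(("127.0.0.1", 8000), PredictHandler).serve_forever()`; the port is arbitrary. Keeping `handle_predict` separate from the HTTP plumbing makes the request logic testable without starting a server.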

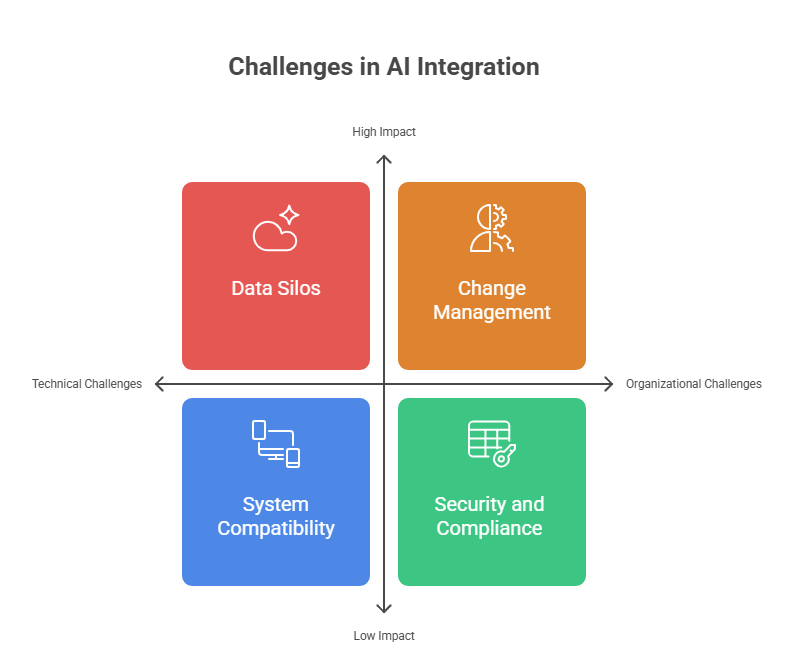

Challenges in Integrating AI with Existing Infrastructure

Even with the best architecture and frameworks, enterprises face challenges when integrating AI into legacy systems.

1. Data Silos

Legacy systems often store data in fragmented silos, making it difficult to aggregate and use for AI training and inference. AI integration services help unify and streamline data pipelines.

2. System Compatibility

Older systems may not support modern APIs, containerized deployments, or real-time streaming, requiring careful integration strategies.

3. Resource Constraints

Limited computational resources and storage in legacy infrastructure may hinder AI model deployment and scaling.

4. Change Management

AI adoption often involves cultural and operational changes. Employees need training, and processes must adapt to new AI-driven workflows.

5. Security and Compliance

Legacy systems may lack modern security measures. Integrating AI requires ensuring secure data flows and compliance with regulatory requirements.

Real-World Applications

AI is transforming industries across the globe by improving efficiency, decision-making, and customer experience. In fact, according to recent research by McKinsey, 73% of organizations are accelerating their digital transformation initiatives to integrate AI and automation into their operations. Here’s how enterprises are leveraging AI across different sectors:

Healthcare

AI-driven diagnostics, predictive analytics, and patient monitoring help detect diseases early, provide personalized treatments, and support proactive healthcare decision-making for better patient outcomes and operational efficiency.

Finance

Fraud detection, credit risk modeling, and automated investment recommendations improve financial security, reduce errors, support compliance, and enable faster, more accurate decision-making for banks and financial institutions.

Retail

Personalized recommendations, demand forecasting, and dynamic pricing enhance customer engagement, optimize inventory management, increase sales, and help retailers respond quickly to market trends and consumer behavior.

Manufacturing

Predictive maintenance, quality control, and process optimization reduce downtime, maintain consistent product quality, improve operational efficiency, and ensure smooth production workflows in industrial environments.

Telecommunications

Network optimization, anomaly detection, and AI-powered customer support enhance service reliability, reduce downtime, improve user experience, and enable telecom providers to respond proactively to technical issues.

Conclusion

A well-structured AI Integration Architecture serves as the backbone of successful enterprise AI initiatives. It ensures that data pipelines, machine learning models, and business systems operate as one unified ecosystem that is scalable, secure, and adaptable to evolving needs. By combining the right frameworks, modular design, and automation practices, organizations can unlock greater efficiency, reduce integration complexity, and accelerate innovation. Building a resilient architecture today lays the foundation for future-ready AI systems that deliver measurable business value and long-term growth.

Why Choose Amplework

Amplework empowers enterprises to design, implement, and optimize their AI Integration Architecture with precision and scalability. Our team combines deep technical expertise in MLOps, data engineering, and cloud orchestration to create architectures that perform consistently under growing workloads. We go beyond connecting AI models to business systems by delivering digital transformation solutions that unify technology, data, and strategy. Every component is designed to communicate efficiently, perform reliably, and align with your long-term business goals.

What sets Amplework apart is our commitment to driving sustainable success. From architecture planning and deployment to maintenance and performance optimization, we provide end-to-end support that keeps your AI ecosystem resilient and future-ready. With proven experience across industries, we help organizations accelerate innovation, strengthen data governance, and achieve measurable ROI from every AI and digital initiative. Partner with Amplework to transform your AI roadmap into a scalable, intelligent, and sustainable reality.

FAQs

What is the difference between AI system integration and AI architecture design?

AI system integration focuses on connecting AI components to business systems, while AI architecture design defines the overall structure, scalability, and flow of the AI ecosystem.

How can legacy systems be integrated with modern AI architectures?

Through API gateways, incremental integration strategies, and data synchronization mechanisms that ensure minimal disruption and maintain data consistency.

Why is MLOps important for scalable AI architectures?

MLOps automates model lifecycle management, ensuring accuracy, performance, and continuous improvements without excessive manual intervention.

What are the common challenges in cloud-based AI integration?

Challenges include latency in real-time processing, multi-cloud complexity, data security, and cost management.

How can enterprises follow AI scalability best practices?

By adopting modular architectures, standardized interfaces, automated workflows, cloud deployment, and continuous monitoring.

Which AI frameworks are best for enterprise integration?

Frameworks like TensorFlow Extended (TFX), Kubeflow, MLflow, Apache Airflow, and BentoML provide end-to-end solutions for AI pipeline management, model deployment, and workflow orchestration.

How do modular architectures improve AI system scalability?

Modular architectures separate AI components into independent units, allowing individual scaling, easier maintenance, and reduced risk of system-wide failures.

What is the role of cloud-based AI integration patterns?

Cloud-based patterns, such as microservices, multi-cloud deployments, event-driven workflows, and containerized models, enable dynamic scaling, high availability, and cost efficiency.

How can AI pipelines handle large-scale enterprise data?

By using real-time streaming, batch processing, workflow orchestration tools, and robust data validation to ensure quality, consistency, and compliance across the pipeline.

What are the key considerations for security and compliance in AI systems?

Enterprises must implement encryption, access controls, audit logging, and ensure adherence to regulations like GDPR or HIPAA to protect sensitive data and maintain system integrity.

sales@amplework.com

(+91) 9636-962-228
