When You Need an ML Infrastructure Expert for Your AI Project

Many AI projects fail not because of weak models or bad data but because of infrastructure problems. Companies invest heavily in data scientists and ML engineers, yet their models never reach production or perform poorly at scale. The missing piece is often ML infrastructure expertise, along with timely MLOps hiring, to keep systems running smoothly.

ML infrastructure specialists bridge the gap between model development and production deployment. They build the pipelines, systems, and processes that make AI work reliably at scale, much as an AI infrastructure specialist does for enterprise systems.

Understanding ML Infrastructure

ML infrastructure supports AI model development, training, deployment, and monitoring. It includes data pipelines, training environments, serving systems, monitoring tools, and automation frameworks. These systems handle challenges such as model versioning, data drift, and continuous retraining, which is why a solid ML pipeline setup is essential.

Key infrastructure components:

- Automated data pipelines

- Training orchestration systems

- Model deployment frameworks

- Performance monitoring tools

- Version control for models and data

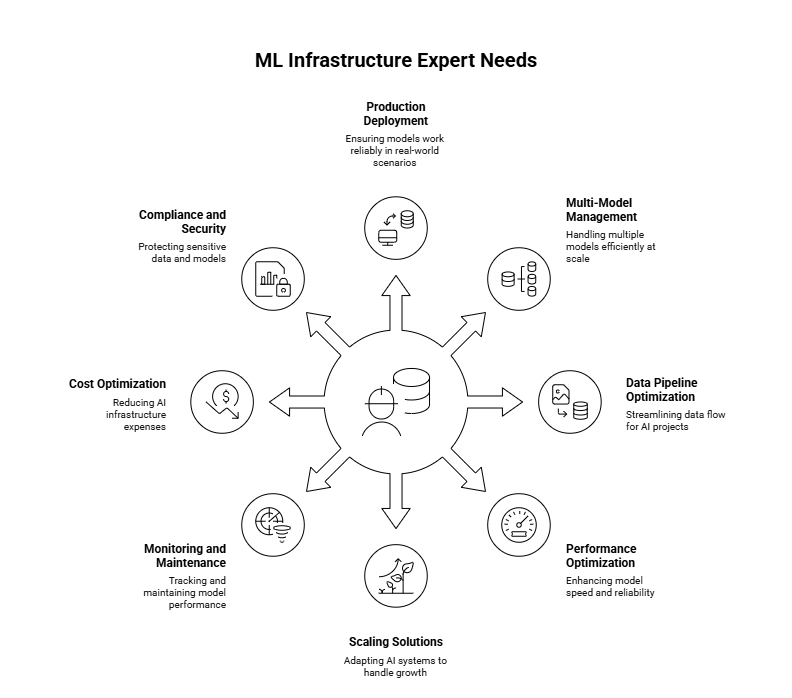

When You Need an ML Infrastructure Expert

1. Moving Models from Development to Production

Models built in notebooks often fail in production. Experts create deployment pipelines so that containerization, APIs, load balancing, and monitoring work reliably. This is a key reason companies prioritize MLOps hiring as they scale.

Production deployment needs:

- Containerization and orchestration setup

- API serving infrastructure

- Load balancing and scaling

- Rollback mechanisms configured

- Production monitoring systems
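
As one illustration of a rollback mechanism, here is a minimal Python sketch. It assumes a hypothetical `Deployment` class and a stand-in `fake_health_check` function, neither of which comes from a real deployment tool. A new version is promoted only if a health check passes; otherwise the previous version stays live.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Deployment:
    """Tracks which model version is live and keeps history for rollback."""
    history: List[str] = field(default_factory=list)

    @property
    def live(self) -> Optional[str]:
        # The most recently promoted version is the one serving traffic.
        return self.history[-1] if self.history else None

    def release(self, version: str, health_check: Callable[[str], bool]) -> bool:
        """Promote `version`; revert to the previous one if the check fails."""
        self.history.append(version)
        if health_check(version):
            return True
        self.history.pop()  # rollback: the previous version is live again
        return False


# Hypothetical health check: only versions ending in "-ok" count as healthy.
def fake_health_check(version: str) -> bool:
    return version.endswith("-ok")


deploy = Deployment()
deploy.release("v1-ok", fake_health_check)
rolled_out = deploy.release("v2-bad", fake_health_check)
print(deploy.live, rolled_out)  # v1-ok False
```

In a real system the health check would probe the serving endpoint and its metrics rather than inspect a version string.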

2. Managing Multiple Models at Scale

Running multiple models simultaneously is complex. Infrastructure experts implement MLOps practices for automated deployments, centralized registries, monitoring dashboards, version control, and AI model optimization to reduce operational overhead.

Multi-model management includes:

- Centralized model registry

- Automated deployment workflows

- Unified monitoring dashboards

- Version control systems

- Resource optimization strategies
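
To make the idea of a centralized model registry concrete, the sketch below keeps every model and its versions in one place. The `ModelRegistry` class, the model names, and the `s3://example-bucket/...` locations are all hypothetical; a production registry would persist entries to a database instead of holding them in memory.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class ModelVersion:
    name: str
    version: int
    artifact_uri: str  # hypothetical storage location of the trained model


class ModelRegistry:
    """In-memory registry that assigns increasing version numbers per model."""

    def __init__(self) -> None:
        self._models: Dict[str, List[ModelVersion]] = {}

    def register(self, name: str, artifact_uri: str) -> ModelVersion:
        versions = self._models.setdefault(name, [])
        entry = ModelVersion(name, len(versions) + 1, artifact_uri)
        versions.append(entry)
        return entry

    def latest(self, name: str) -> ModelVersion:
        return self._models[name][-1]

    def names(self) -> List[str]:
        return sorted(self._models)


registry = ModelRegistry()
registry.register("churn", "s3://example-bucket/churn/1")
registry.register("churn", "s3://example-bucket/churn/2")
registry.register("fraud", "s3://example-bucket/fraud/1")
print(registry.latest("churn").version, registry.names())  # 2 ['churn', 'fraud']
```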

3. Data Pipeline Bottlenecks

Delayed or failed data feeds stall AI projects. Specialists build automated pipelines that deliver clean, real-time data, and a stable ML pipeline setup removes the bottlenecks.

Pipeline optimization addresses:

- Automated data validation

- Real-time processing capabilities

- Error handling and recovery

- Data quality monitoring

- Scalable storage solutions
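
Automated data validation can start very simply. The following sketch assumes a hypothetical `SCHEMA` with three made-up fields; it checks each record for missing required fields and wrong types, then routes bad records to a rejected list so that one malformed row does not stop the whole batch.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical schema: field name -> (expected type, required?)
SCHEMA = {
    "user_id": (int, True),
    "amount": (float, True),
    "country": (str, False),
}


def validate(record: Dict[str, Any]) -> List[str]:
    """Return a list of problems found in one record (empty means valid)."""
    errors = []
    for name, (expected_type, required) in SCHEMA.items():
        if record.get(name) is None:
            if required:
                errors.append(f"missing required field: {name}")
            continue
        if not isinstance(record[name], expected_type):
            errors.append(f"{name}: expected {expected_type.__name__}")
    return errors


def split_batch(
    batch: List[Dict[str, Any]],
) -> Tuple[List[Dict[str, Any]], List[Tuple[Dict[str, Any], List[str]]]]:
    """Separate valid records from rejected ones, keeping the reasons."""
    valid, rejected = [], []
    for record in batch:
        errors = validate(record)
        if errors:
            rejected.append((record, errors))
        else:
            valid.append(record)
    return valid, rejected


batch = [
    {"user_id": 1, "amount": 9.5, "country": "IN"},
    {"user_id": 2},                      # missing amount
    {"user_id": "3", "amount": 4.0},     # wrong type for user_id
]
valid, rejected = split_batch(batch)
print(len(valid), len(rejected))  # 1 2
```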

4. Performance and Latency Issues

Slow AI models degrade the user experience. Infrastructure experts optimize model serving, caching, and load distribution to deliver fast, reliable inference without compromising accuracy.

Performance optimization includes:

- Model serving optimization

- Inference acceleration techniques

- Caching strategy implementation

- Load distribution systems

- Resource allocation tuning
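
A caching strategy can be sketched with Python's built-in `functools.lru_cache`. The `slow_model` function below is a hypothetical stand-in for an expensive inference call; repeated requests with identical features are answered from memory instead of calling the model again.

```python
from functools import lru_cache

calls = {"model": 0}  # counts how often the underlying model actually runs


def slow_model(features: tuple) -> float:
    """Stand-in for an expensive inference call (hypothetical)."""
    calls["model"] += 1
    return sum(features) / len(features)


@lru_cache(maxsize=1024)
def cached_predict(features: tuple) -> float:
    # Features must be hashable (a tuple, not a list) to serve as a cache key.
    return slow_model(features)


cached_predict((1.0, 2.0, 3.0))
cached_predict((1.0, 2.0, 3.0))  # served from the cache
cached_predict((4.0, 5.0, 6.0))
print(calls["model"], cached_predict.cache_info().hits)  # 2 1
```

Caching of this kind only helps when the same inputs recur and when slightly stale predictions are acceptable; both are assumptions to verify for a given workload.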

5. Scaling Challenges

AI systems may work with small datasets but crash under production loads. ML experts design scalable architectures with distributed training, auto-scaling, and optimized storage to handle growth efficiently.

Scaling solutions involve:

- Distributed training setup

- Cloud resource optimization

- Auto-scaling configurations

- Batch processing systems

- Storage architecture design
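
An auto-scaling configuration ultimately boils down to a rule for how many replicas to run. The sketch below borrows the proportional rule used by the Kubernetes Horizontal Pod Autoscaler (desired = ceil(current replicas × current metric / target metric)); the article itself does not prescribe a tool, and the function name, load figures, and replica limits here are illustrative assumptions.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_load: float,
    target_load: float,
    min_replicas: int = 1,
    max_replicas: int = 20,
) -> int:
    """Scale replicas in proportion to load, clamped to [min, max]."""
    if target_load <= 0:
        raise ValueError("target_load must be positive")
    raw = math.ceil(current_replicas * current_load / target_load)
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas at 90% average utilization, aiming for 60%.
print(desired_replicas(4, 90, 60))    # 6
# Load collapses: scale down, but never below min_replicas.
print(desired_replicas(4, 10, 60))    # 1
# Load spikes: scale up, but never above max_replicas.
print(desired_replicas(10, 300, 60))  # 20
```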

6. Model Monitoring and Maintenance

Without monitoring, AI models degrade unnoticed. Specialists track accuracy, latency, data drift, and resource usage while triggering automatic retraining to maintain consistent performance over time.

Monitoring systems track:

- Model accuracy metrics

- Data drift detection

- Prediction latency

- Resource utilization

- Error rates and patterns
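
Data drift detection can be illustrated with the Population Stability Index (PSI), one common drift score among several. The sketch below is a plain-Python version under stated assumptions: equal-width bins taken from the baseline data, a small epsilon to avoid dividing by zero, and a retraining threshold of 0.2, which is an often-quoted rule of thumb and not a value from this article.

```python
import math
from typing import List, Sequence

EPSILON = 1e-6  # floor for empty bins so the logarithm stays defined


def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Fraction of values per bin; out-of-range values go to the end bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = 0
        for i in range(len(edges) - 1):
            if v >= edges[i]:
                idx = i
        counts[idx] += 1
    return [max(c / len(values), EPSILON) for c in counts]


def psi(baseline: Sequence[float], current: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline and a current sample."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline
    edges = [lo + i * width for i in range(bins + 1)]
    expected = bin_fractions(baseline, edges)
    actual = bin_fractions(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


baseline = [i / 100 for i in range(100)]
shifted = [x + 0.5 for x in baseline]   # simulated drift in the feature

DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb cutoff
needs_retraining = psi(baseline, shifted) > DRIFT_THRESHOLD
print(needs_retraining)  # True
```

A monitoring job could compute this score per feature on a schedule and trigger retraining when it crosses the chosen threshold.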

7. Cost Optimization

AI infrastructure costs can escalate. Experts optimize resource allocation, batch processing, storage tiers, and cloud utilization while implementing automated cleanup to reduce expenses without affecting performance.

Cost optimization strategies:

- Right-sized resource allocation

- Spot instance utilization

- Efficient batch processing

- Storage tier optimization

- Automated resource cleanup
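
Automated resource cleanup usually begins with finding what is safe to remove. This sketch assumes a hypothetical `Resource` record with a last-used timestamp and a `protected` flag, plus a 14-day idle window chosen only for illustration; it lists cleanup candidates without deleting anything.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class Resource:
    name: str
    last_used: datetime
    protected: bool = False  # e.g. production endpoints that must never be removed


def find_stale(
    resources: List[Resource],
    now: datetime,
    max_idle: timedelta = timedelta(days=14),
) -> List[str]:
    """Names of unprotected resources idle for longer than `max_idle`."""
    return [
        r.name
        for r in resources
        if not r.protected and now - r.last_used > max_idle
    ]


now = datetime(2024, 6, 30)  # fixed date so the example is reproducible
inventory = [
    Resource("gpu-notebook-a", datetime(2024, 6, 1)),
    Resource("training-cluster", datetime(2024, 6, 28)),
    Resource("prod-endpoint", datetime(2024, 5, 1), protected=True),
]
print(find_stale(inventory, now))  # ['gpu-notebook-a']
```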

8. Compliance and Security Requirements

Handling sensitive data requires strict regulatory compliance. Specialists design secure ML systems with encryption, access controls, audit logging, and continuous compliance monitoring to protect data and models.

Security implementation includes:

- Data encryption standards

- Access control policies

- Audit logging systems

- Compliance monitoring tools

- Secure model serving
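
Access control and audit logging often work together: every attempt is recorded, whether or not it is allowed. The sketch below uses a hypothetical role table and an in-memory `AUDIT_LOG` list; a real system would use its identity provider for roles and write audit entries to durable, tamper-resistant storage.

```python
import functools
from datetime import datetime, timezone

AUDIT_LOG = []  # in-memory stand-in for a durable audit store

# Hypothetical role table: role -> actions it may perform
ROLE_PERMISSIONS = {
    "ml-engineer": {"predict", "deploy"},
    "analyst": {"predict"},
}


def audited(action: str):
    """Decorator that checks the caller's role and records every attempt."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(user: str, role: str, *args, **kwargs):
            allowed = action in ROLE_PERMISSIONS.get(role, set())
            AUDIT_LOG.append({
                "time": datetime.now(timezone.utc).isoformat(),
                "user": user,
                "action": action,
                "allowed": allowed,
            })
            if not allowed:
                raise PermissionError(f"role '{role}' may not {action}")
            return func(user, role, *args, **kwargs)
        return wrapper
    return decorator


@audited("deploy")
def deploy_model(user: str, role: str, model_name: str) -> str:
    return f"{model_name} deployed by {user}"


result = deploy_model("ana", "ml-engineer", "churn")
try:
    deploy_model("ben", "analyst", "churn")
except PermissionError:
    pass  # the denied attempt is still recorded in AUDIT_LOG

print(result, [entry["allowed"] for entry in AUDIT_LOG])
```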

Factors Showing You’re Ready for ML Infrastructure Investment

Before scaling your AI initiatives, it’s important to recognize the key indicators that signal readiness for ML infrastructure investment.

- Growing Model Complexity: You’re moving beyond simple models to complex architectures requiring sophisticated infrastructure.

- Production Deployment: The development phase is complete, and you need reliable production systems.

- Team Scaling: Your data science team is growing and needs standardized processes and tools.

- Multiple Stakeholders: Various teams need access to ML capabilities requiring centralized infrastructure.

- Cost Concerns: Current infrastructure costs are unsustainable or unpredictable.

Conclusion

ML infrastructure expertise is essential when moving AI from experimentation to production. The right systems ensure reliability and scalability and prevent costly failures.

At Amplework Software, we build production-ready ML infrastructure that scales with your business. Our AI/ML services handle deployment, monitoring, and optimization, letting your team focus on model development and results.

sales@amplework.com

(+91) 9636-962-228
