How to Train an AI Assistant on Sensitive Internal Data Without Exposing It

Introduction

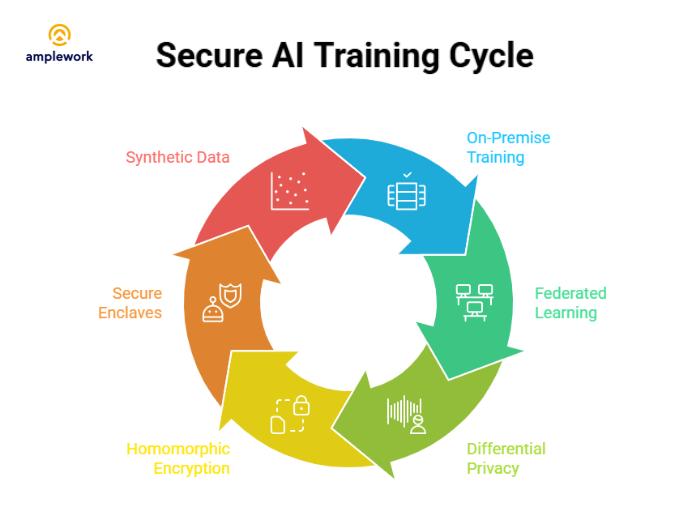

Organizations often avoid training AI assistants on sensitive internal data because of the risk of exposing it. Privacy-preserving AI techniques, such as federated learning and secure enclaves, make it possible to train capable models on that data while keeping it confidential.

In this blog post, we discuss how to train an AI assistant on sensitive data using techniques such as federated learning, secure enclaves, and synthetic data generation, along with practical implementation steps and best practices.

Understanding the Data Exposure Problem

Conventional AI training often sends sensitive data to external servers, creating several risk points: exposure in transit, shared storage, vendor breaches, and compliance violations. For enterprises, these risks highlight the need for a secure way to train an AI assistant on sensitive data.

Key exposure risks include:

- Data transmission to external servers

- Storage on shared cloud infrastructure

- Possible access by vendor employees

- Vulnerability to data breaches

- Regulatory compliance violations

Secure Methods to Train an AI Assistant on Sensitive Data

1. On-Premise Training Infrastructure

Run model training entirely within your own secure data centers so you retain full control over sensitive information. This approach is well suited to healthcare, finance, and government data.

Advantages:

- Complete data sovereignty

- Zero external transmission

- Full security protocol control

- Direct compliance management

- Custom infrastructure optimization

2. Federated Learning Implementation

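Train AI models across multiple locations without centralizing data. Each site trains on its own data and sends only model updates (such as weights or gradients) to a coordinator, which combines them into a shared model. Raw data never leaves its source, which reduces breach risk. This suits organizations with multiple offices or regional data stores.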

Benefits:

- Data remains in secure environments

- Multi-location training support

- Minimized centralized risk

- Compliance with data residency

- Individual privacy preservation
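As an illustration, the sketch below shows federated averaging (FedAvg), one common federated learning algorithm, in plain Python. It assumes a toy linear model and three hypothetical sites; the function names (`local_update`, `federated_average`, `train_federated`) are illustrative and not taken from any framework. Each site runs a few gradient steps on its own data and returns only its parameters, which the coordinator averages, weighted by how many examples each site holds.

```python
import random
from typing import List, Tuple

# A site's private data: (x, y) pairs that never leave that site.
Dataset = List[Tuple[float, float]]
Params = Tuple[float, float]  # (weight, bias) of a toy linear model y = w*x + b


def local_update(params: Params, data: Dataset, lr: float = 0.1, epochs: int = 5) -> Params:
    """Run a few epochs of full-batch gradient descent on one site's data.

    Only the updated parameters are returned to the coordinator."""
    w, b = params
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def federated_average(updates: List[Params], sizes: List[int]) -> Params:
    """Average site parameters, weighting each site by its number of examples."""
    total = sum(sizes)
    w = sum(u[0] * n for u, n in zip(updates, sizes)) / total
    b = sum(u[1] * n for u, n in zip(updates, sizes)) / total
    return w, b


def train_federated(sites: List[Dataset], rounds: int = 50) -> Params:
    """Coordinator loop: broadcast global params, collect local updates, average."""
    params: Params = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(params, data) for data in sites]
        params = federated_average(updates, [len(data) for data in sites])
    return params


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical example: three offices holding private, noisy samples of y = 3x + 1.
    sites = [
        [(x, 3 * x + 1 + rng.gauss(0, 0.1)) for x in (rng.uniform(-1, 1) for _ in range(100))]
        for _ in range(3)
    ]
    w, b = train_federated(sites)
    print(f"learned w={w:.2f}, b={b:.2f}")
```

Model updates can still leak information about the data they were computed on, so production frameworks (for example Flower or TensorFlow Federated) typically combine this pattern with secure aggregation or differential privacy.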

3. Differential Privacy Techniques

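Add calibrated noise during training (for example, to gradients) or to released statistics, so that adding or removing any single record has only a small, measurable effect on the result. The model still learns aggregate patterns, but individual records cannot be singled out. This is especially valuable in the financial and healthcare sectors.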

Benefits:

- Mathematical privacy guarantees

- Protects individual records

- Preserves aggregate patterns

- Regulatory compliance support

- Quantifiable privacy levels
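One widely used way to apply differential privacy during training is DP-SGD: clip each example's gradient to a fixed norm, then add Gaussian noise scaled to that norm before updating the model. The sketch below shows a single DP-SGD step for a toy linear model in plain Python. The helper names and all parameter values are illustrative assumptions, not a library API.

```python
import math
import random
from typing import List, Sequence, Tuple

Example = Tuple[List[float], float]  # (features, label)


def per_example_grad(w: Sequence[float], x: Sequence[float], y: float) -> List[float]:
    """Gradient of the squared error (w . x - y)^2 for a single example."""
    err = sum(wi * xi for wi, xi in zip(w, x)) - y
    return [2 * err * xi for xi in x]


def clip(g: List[float], clip_norm: float) -> List[float]:
    """Rescale g so its L2 norm is at most clip_norm, bounding any one record's influence."""
    norm = math.sqrt(sum(v * v for v in g))
    if norm <= clip_norm:
        return list(g)
    return [v * clip_norm / norm for v in g]


def dp_sgd_step(
    w: List[float],
    batch: List[Example],
    lr: float,
    clip_norm: float,
    noise_multiplier: float,
    rng: random.Random,
) -> List[float]:
    """One DP-SGD update: clip per-example gradients, sum, add Gaussian noise, average."""
    summed = [0.0] * len(w)
    for x, y in batch:
        g = clip(per_example_grad(w, x, y), clip_norm)
        summed = [s + v for s, v in zip(summed, g)]
    # Noise standard deviation is proportional to the clipping bound (the sensitivity).
    sigma = noise_multiplier * clip_norm
    noisy_mean = [(s + rng.gauss(0.0, sigma)) / len(batch) for s in summed]
    return [wi - lr * gi for wi, gi in zip(w, noisy_mean)]


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical toy data: y = 2x with x drawn from [-1, 1].
    data = [([x], 2 * x) for x in (rng.uniform(-1, 1) for _ in range(256))]
    w = [0.0]
    for _ in range(200):
        batch = rng.sample(data, 32)
        w = dp_sgd_step(w, batch, lr=0.5, clip_norm=1.0, noise_multiplier=1.1, rng=rng)
    print(f"learned weight: {w[0]:.2f} (true weight is 2.0)")
```

This shows only the noise mechanism. Turning `noise_multiplier`, batch size, and the number of steps into a concrete (ε, δ) guarantee requires a privacy accountant, which maintained libraries such as Opacus (PyTorch) and TensorFlow Privacy provide. With `noise_multiplier=0` the step reduces to ordinary clipped gradient descent, which is a useful sanity check.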

4. Homomorphic Encryption

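Train AI models on encrypted data. Homomorphic encryption allows computations to be performed directly on ciphertexts without decrypting them, so data stays protected throughout. It suits highly sensitive datasets, although it is far more computationally expensive than working on plaintext.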

Advantages:

- Data stays encrypted throughout computation

- No plaintext exposure

- Mathematical security guarantees

- Cloud-based training possible

- Maximum data protection
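To make the idea concrete, here is a toy implementation of the Paillier cryptosystem, an additively homomorphic scheme: multiplying two ciphertexts yields an encryption of the sum of their plaintexts, so a server can aggregate values it cannot read. This is a teaching sketch under loud assumptions: the fixed Mersenne primes are far too small and too well known to be secure, and Paillier supports only addition and scaling by plaintext constants, not full model training.

```python
import math
import secrets
from typing import Tuple

# Public key is n (the generator is fixed at g = n + 1); private key is (lambda, mu).
PublicKey = int
PrivateKey = Tuple[int, int]


def keygen(p: int, q: int) -> Tuple[PublicKey, PrivateKey]:
    """Build a Paillier key pair from two distinct primes p and q."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # with g = n + 1, mu is simply lambda^-1 mod n
    return n, (lam, mu)


def encrypt(n: PublicKey, m: int) -> int:
    """Encrypt 0 <= m < n with fresh randomness r, so equal plaintexts give different ciphertexts."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return pow(n + 1, m, n2) * pow(r, n, n2) % n2


def decrypt(n: PublicKey, sk: PrivateKey, c: int) -> int:
    """Recover m = L(c^lambda mod n^2) * mu mod n, where L(u) = (u - 1) // n."""
    lam, mu = sk
    u = pow(c, lam, n * n)
    return (u - 1) // n * mu % n


def add_encrypted(n: PublicKey, c1: int, c2: int) -> int:
    """Enc(a) * Enc(b) mod n^2 decrypts to a + b (mod n)."""
    return c1 * c2 % (n * n)


def scale_encrypted(n: PublicKey, c: int, k: int) -> int:
    """Enc(a)^k mod n^2 decrypts to k * a (mod n)."""
    return pow(c, k, n * n)


if __name__ == "__main__":
    # INSECURE toy primes (Mersenne primes 2^31 - 1 and 2^61 - 1), for illustration only.
    n, sk = keygen(2**31 - 1, 2**61 - 1)
    salaries = [52000, 61000, 58000]  # hypothetical sensitive values
    total = encrypt(n, 0)
    for s in salaries:
        total = add_encrypted(n, total, encrypt(n, s))  # aggregated without decrypting
    print(decrypt(n, sk, total))  # prints 171000
```

Encrypted computation on real models usually relies on lattice-based schemes such as CKKS or BFV through vetted libraries like Microsoft SEAL or OpenFHE, rather than hand-rolled code like this.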

5. Secure Enclaves and Confidential Computing

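Use hardware-based trusted execution environments (secure enclaves) that keep data encrypted in memory and isolated while it is processed. This shields it from the host operating system, the hypervisor, and the cloud provider's administrators, which makes enclaves well suited to cloud adoption without compromising sensitive information.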

Features:

- Hardware-level security

- Encrypted memory processing

- No access for host administrators

- Scalable cloud deployment

- Remote attestation to verify the code running inside
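Remote attestation is what lets a data owner trust an enclave: before releasing a decryption key, the owner checks a hardware-signed report showing which code is running inside it. The sketch below simulates that decision in plain Python and is assumption-heavy: an HMAC key plays the role of the hardware vendor's signing key, and the `AttestationReport` format and function names are hypothetical. Real platforms (Intel SGX/TDX, AMD SEV-SNP) use asymmetric signatures and certificate chains verified through vendor tooling.

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass
from typing import Optional

# HYPOTHETICAL stand-in for the CPU vendor's signing key. Real attestation uses an
# asymmetric key rooted in the hardware, and the verifier never holds the private part.
HARDWARE_KEY = secrets.token_bytes(32)


@dataclass
class AttestationReport:
    measurement: bytes  # hash of the code loaded into the enclave
    nonce: bytes        # verifier-chosen value that proves the report is fresh
    signature: bytes    # simulated hardware signature over measurement + nonce


def measure(enclave_code: bytes) -> bytes:
    return hashlib.sha256(enclave_code).digest()


def produce_report(enclave_code: bytes, nonce: bytes) -> AttestationReport:
    """Simulates what the enclave hardware would produce on request."""
    m = measure(enclave_code)
    sig = hmac.new(HARDWARE_KEY, m + nonce, hashlib.sha256).digest()
    return AttestationReport(m, nonce, sig)


def release_key_if_trusted(
    report: AttestationReport, expected_measurement: bytes, nonce: bytes, data_key: bytes
) -> Optional[bytes]:
    """Data owner's check: release the key only to approved, freshly attested code."""
    expected_sig = hmac.new(
        HARDWARE_KEY, report.measurement + report.nonce, hashlib.sha256
    ).digest()
    if not hmac.compare_digest(report.signature, expected_sig):
        return None  # report was not signed by the (simulated) hardware
    if not hmac.compare_digest(report.nonce, nonce):
        return None  # stale or replayed report
    if not hmac.compare_digest(report.measurement, expected_measurement):
        return None  # enclave is running unapproved code
    return data_key


if __name__ == "__main__":
    approved = b"approved training code v1"
    nonce = secrets.token_bytes(16)
    key = secrets.token_bytes(32)
    print(release_key_if_trusted(produce_report(approved, nonce), measure(approved), nonce, key) == key)  # True
    print(release_key_if_trusted(produce_report(b"tampered code", nonce), measure(approved), nonce, key))  # None
```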

6. Synthetic Data Generation

Generate artificial datasets that mimic the statistical patterns of real data without containing actual sensitive records. This enables safe AI development, testing, and collaboration while maintaining privacy.

Benefits:

- Greatly reduces privacy risk

- Unlimited data creation

- Fewer consent and sharing restrictions

- Facilitates secure sharing

- Safe development environment
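A very simple form of synthetic data generation fits a distribution to each column of the real table and samples new rows from those distributions. The sketch below does this in plain Python and drops categories that occur fewer than `min_count` times, so rare, potentially identifying values are not reproduced. The function names, the threshold, and the example table are illustrative assumptions.

```python
import random
import statistics
from collections import Counter
from typing import Dict, List, Tuple, Union

Value = Union[float, str]
Row = Dict[str, Value]


def fit_marginals(rows: List[Row], min_count: int = 5) -> Dict[str, Tuple]:
    """Learn an independent distribution per column from the real rows."""
    model: Dict[str, Tuple] = {}
    for col in rows[0]:
        values = [row[col] for row in rows]
        if all(isinstance(v, (int, float)) for v in values):
            model[col] = ("numeric", statistics.mean(values), statistics.stdev(values))
        else:
            # Drop rare categories: a value seen only once or twice may identify a person.
            kept = {v: c for v, c in Counter(values).items() if c >= min_count}
            if not kept:
                raise ValueError(f"column {col!r} has no category with >= {min_count} rows")
            model[col] = ("categorical", list(kept), list(kept.values()))
    return model


def sample_synthetic(model: Dict[str, Tuple], n: int, seed: int = 0) -> List[Row]:
    """Draw n synthetic rows; each column is sampled independently."""
    rng = random.Random(seed)
    rows: List[Row] = []
    for _ in range(n):
        row: Row = {}
        for col, spec in model.items():
            if spec[0] == "numeric":
                _, mean, stdev = spec
                row[col] = rng.gauss(mean, stdev)
            else:
                _, categories, weights = spec
                row[col] = rng.choices(categories, weights=weights)[0]
        rows.append(row)
    return rows


if __name__ == "__main__":
    # Hypothetical "real" table of employee records.
    real = [
        {"age": 34.0, "dept": "finance"},
        {"age": 41.0, "dept": "finance"},
        {"age": 29.0, "dept": "legal"},
        {"age": 52.0, "dept": "finance"},
        {"age": 38.0, "dept": "legal"},
        {"age": 45.0, "dept": "rare-unit"},
    ]
    for row in sample_synthetic(fit_marginals(real, min_count=2), 3):
        print(row)
```

Because each column is sampled independently, correlations between columns are lost. Richer generators preserve more structure but can also memorize real records, which is why synthetic data is often combined with differential privacy and checked for leakage before it is shared.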

Practical Implementation Steps

- Assess Data Sensitivity: Classify your data by sensitivity level and regulatory requirements. Different data types may require different protection approaches.

- Select Appropriate Techniques: Match security methods to your specific needs, considering data type, regulatory requirements, budget, and performance needs.

- Start with a Proof of Concept: Test the chosen approaches on representative data in a PoC before full implementation. Confirm that the security controls work and that performance is acceptable.

- Implement Monitoring: Deploy continuous monitoring to detect unauthorized access attempts, unusual patterns, or security breaches.

- Train Your Team: Ensure staff understand security protocols, proper data handling procedures, and incident response processes.
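The first step, assessing data sensitivity, can start with automated pattern detection. Below is a minimal rule-based sketch that tags text with a sensitivity tier based on a few PII patterns. The patterns, tier names, and ranking are hypothetical examples; real classification should reflect your own regulatory requirements, and dedicated tools such as Microsoft Presidio offer far more robust PII detection.

```python
import re
from typing import List

# HYPOTHETICAL patterns and tiers for illustration; real rules should come from your
# own data inventory and regulatory requirements.
PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}
TIER_FOR = {"email": "confidential", "us_ssn": "restricted", "card_number": "restricted"}
TIER_RANK = {"internal": 0, "confidential": 1, "restricted": 2}


def detect(text: str) -> List[str]:
    """Return the names of all PII patterns found in text."""
    return [name for name, pattern in PATTERNS.items() if pattern.search(text)]


def classify(text: str) -> str:
    """Assign the most restrictive tier triggered by any detected pattern."""
    tier = "internal"  # default when no pattern matches
    for name in detect(text):
        if TIER_RANK[TIER_FOR[name]] > TIER_RANK[tier]:
            tier = TIER_FOR[name]
    return tier


if __name__ == "__main__":
    for doc in ["Q3 roadmap draft", "Contact jane.doe@example.com", "SSN 123-45-6789"]:
        print(f"{classify(doc):<12} {doc}")
```

Rules like these catch only well-formed identifiers and will miss names, free-text health details, and many other sensitive fields, so treat them as a first pass to be reviewed by people who know the data.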

The Amplework Secure AI Training Advantage

At Amplework Software, we specialize in privacy-preserving AI solutions that enable organizations to train AI assistants on sensitive data safely. Our expertise spans federated learning, differential privacy, secure enclaves, and encrypted training implementations.

Our Secure AI Training Services:

- Privacy-preserving architecture design

- On-premise and hybrid deployment options

- Federated learning implementation

- Differential privacy integration

- Confidential computing solutions

We’ve helped healthcare providers, financial institutions, and enterprises across regulated industries build AI solutions and secure machine learning pipelines without compromising on AI capabilities. Our approach balances maximum security with practical performance and usability requirements.


Conclusion

It is possible to build powerful AI assistants without compromising sensitive data. By applying privacy-preserving techniques, organizations can train an AI assistant on sensitive data securely. Selecting the right methods and following a structured implementation process helps ensure compliance, confidentiality, and high-quality AI results.

sales@amplework.com

(+91) 9636-962-228