How to Keep Your Data Private and Secure When Training a Custom AI Model

Overview

Training custom AI models requires large amounts of data, and that data often contains sensitive business information, customer details, or proprietary insights. Many companies hesitate to pursue AI initiatives because they fear data breaches, compliance violations, or intellectual property (IP) theft during training. Secure AI model training addresses these concerns by protecting data privacy at every step of the process.

Data security doesn’t have to be a barrier to AI adoption. With the right security measures and privacy-preserving techniques, you can train powerful custom AI models while keeping your sensitive data protected.

Understanding AI Training Security Risks

Custom model development exposes sensitive data to multiple vulnerabilities. Cloud-based platforms may store information on shared infrastructure, while third-party AI services can retain copies of the data. Internal teams may also have access to datasets, increasing insider risk. These risks highlight the importance of secure AI model training.

Key concerns include unauthorized access to training data, leakage of sensitive information through model outputs, data retention by third-party providers, insecure data transmission, and weak access controls.

Essential Security Measures for AI Training

1. On-Premise Infrastructure Deployment

Train AI models within your own secure infrastructure rather than on public cloud platforms. This approach gives you complete control over data storage, access, and processing. On-premise deployment removes the risk of third-party providers storing or accessing your data and helps satisfy strict compliance requirements for highly sensitive information.

On-premise advantages:

- Full data sovereignty maintained

- No third-party access points

- Custom security protocols enabled

- Direct compliance control

- Zero vendor data retention

2. End-to-End Encryption

Encrypt data at every stage: storage, transmission, and, where your platform supports it, processing. Encryption keeps datasets and model artifacts unreadable to anyone who intercepts or steals them without the keys, so protecting those keys matters as much as the encryption itself.

Encryption coverage includes:

- Data at rest protection

- Secure transmission protocols

- Encrypted model storage

- Protected backup systems

- Secure key management
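As a minimal sketch of protecting data at rest, the example below encrypts a training record with AES-256-GCM. It assumes the third-party `cryptography` package (the article does not name a library), and the helpers `encrypt_blob` and `decrypt_blob` are hypothetical names. GCM both encrypts and authenticates, so a modified blob, or one decrypted with the wrong context label, fails instead of returning corrupted data.

```python
import os

# Third-party dependency (assumption): pip install cryptography
from cryptography.hazmat.primitives.ciphers.aead import AESGCM

NONCE_SIZE = 12  # 96-bit nonce, the standard size for AES-GCM


def encrypt_blob(key: bytes, plaintext: bytes, context: bytes) -> bytes:
    """Encrypt with AES-GCM and prepend the random nonce to the ciphertext."""
    nonce = os.urandom(NONCE_SIZE)  # a nonce must never be reused with the same key
    # `context` is authenticated but not encrypted; it binds the blob to e.g. a dataset name
    return nonce + AESGCM(key).encrypt(nonce, plaintext, context)


def decrypt_blob(key: bytes, blob: bytes, context: bytes) -> bytes:
    """Decrypt and verify; raises cryptography.exceptions.InvalidTag on tampering."""
    nonce, ciphertext = blob[:NONCE_SIZE], blob[NONCE_SIZE:]
    return AESGCM(key).decrypt(nonce, ciphertext, context)


if __name__ == "__main__":
    # Illustration only: a real key would come from a KMS or HSM, never stored beside the data.
    key = AESGCM.generate_key(bit_length=256)
    record = b'{"customer_id": 1042, "segment": "enterprise"}'
    blob = encrypt_blob(key, record, b"dataset=train-v1")
    assert decrypt_blob(key, blob, b"dataset=train-v1") == record
    print(f"{len(record)} plaintext bytes -> {len(blob)} stored bytes")
```

Keeping the key outside the storage system that holds the encrypted files is what makes this useful: a stolen disk or backup yields only ciphertext.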

3. Data Anonymization Techniques

Remove personally identifiable information and other sensitive details before training begins. Anonymization protects individual privacy while preserving the data patterns a model needs to learn effectively. If data is compromised during training, anonymized records expose far less than raw ones.

Anonymization methods:

- Remove direct identifiers completely

- Aggregate into broader categories

- Apply differential privacy techniques

- Pseudonymize identifiers that must stay reversible

- Enforce k-anonymity on quasi-identifiers
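The methods above can be sketched in plain Python. This example assumes a hypothetical record layout (`name`, `email`, `age`, `zip`, `diagnosis`) and touches each listed method: it drops the name entirely, pseudonymizes the email with a keyed HMAC token whose mapping is kept in a separate vault, generalizes age and ZIP code into broader bands, and checks k-anonymity over the generalized fields. The `dp_count` helper illustrates differential privacy with the Laplace mechanism on a released count; it is not a differentially private training method such as DP-SGD. All class and function names are illustrative.

```python
import hashlib
import hmac
import random
from collections import Counter


class Pseudonymizer:
    """Replaces an identifier with a keyed token (hypothetical design).

    The token-to-value vault must be stored apart from the training data so
    that only authorized staff can reverse the mapping.
    """

    def __init__(self, secret_key: bytes):
        self._key = secret_key
        self._vault: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        token = hmac.new(self._key, value.encode(), hashlib.sha256).hexdigest()[:16]
        self._vault[token] = value
        return token

    def reidentify(self, token: str) -> str:
        return self._vault[token]


def generalize_age(age: int) -> str:
    low = (age // 10) * 10
    return f"{low}-{low + 9}"  # e.g. 34 -> "30-39"


def anonymize(records: list[dict], pseudonymizer: Pseudonymizer) -> list[dict]:
    rows = []
    for r in records:
        rows.append({
            # "name" is a direct identifier and is dropped entirely
            "patient": pseudonymizer.tokenize(r["email"]),  # pseudonymized
            "age_band": generalize_age(r["age"]),           # generalized
            "zip3": r["zip"][:3] + "**",                     # generalized
            "diagnosis": r["diagnosis"],                     # kept for training
        })
    return rows


def is_k_anonymous(rows: list[dict], quasi_ids: list[str], k: int) -> bool:
    """True if every combination of quasi-identifier values occurs at least k times."""
    groups = Counter(tuple(row[q] for q in quasi_ids) for row in rows)
    return bool(groups) and min(groups.values()) >= k


def dp_count(true_count: int, epsilon: float) -> float:
    """Laplace mechanism for a count query (sensitivity 1, noise scale 1/epsilon)."""
    # The difference of two Exp(epsilon) draws is Laplace-distributed with scale 1/epsilon.
    noise = random.expovariate(epsilon) - random.expovariate(epsilon)
    return true_count + noise


if __name__ == "__main__":
    records = [
        {"name": "Ana", "email": "ana@example.com", "age": 34, "zip": "94107", "diagnosis": "flu"},
        {"name": "Ben", "email": "ben@example.com", "age": 38, "zip": "94110", "diagnosis": "asthma"},
    ]
    # Demo key only; a real key belongs in a secrets manager
    rows = anonymize(records, Pseudonymizer(secret_key=b"demo-key"))
    print(is_k_anonymous(rows, ["age_band", "zip3"], k=2))  # True
    print(dp_count(len(rows), epsilon=1.0))  # 2 plus random Laplace noise
```

Both sample rows generalize to the same `("30-39", "941**")` group, so the output is 2-anonymous on those fields. Smaller `epsilon` values add more noise and give stronger privacy.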

4. Federated Learning Implementation

Train AI models across distributed datasets without centralizing sensitive information. Data stays in its original secure location: each site trains the model locally and shares only model updates, which a central server combines into a global model. This decentralized approach minimizes exposure and helps satisfy data residency requirements.

Federated learning benefits:

- Data never leaves secure environments

- Reduced centralized breach risks

- Multi-location training capability

- Maintained regional compliance

- Smaller attack surface
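The core loop of federated learning can be sketched with federated averaging (FedAvg), a widely used aggregation scheme; the article does not name an algorithm, so this is an assumed choice. Each hypothetical site fits a tiny linear model `y = w*x + b` on its own data and returns only its updated weights and sample count, and the server averages those weights in proportion to sample count. The raw `(x, y)` pairs never leave `local_update`.

```python
Weights = tuple[float, float]  # (w, b) for the model y = w * x + b


def local_update(weights: Weights, data: list[tuple[float, float]],
                 lr: float = 0.05, epochs: int = 5) -> tuple[Weights, int]:
    """Runs gradient descent on one site's private data; returns only weights and count."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b), n


def federated_average(updates: list[tuple[Weights, int]]) -> Weights:
    """Server step: average site weights, weighted by each site's sample count."""
    total = sum(n for _, n in updates)
    w = sum(weights[0] * n for weights, n in updates) / total
    b = sum(weights[1] * n for weights, n in updates) / total
    return (w, b)


def train_federated(site_datasets: list[list[tuple[float, float]]],
                    rounds: int = 500) -> Weights:
    global_weights: Weights = (0.0, 0.0)
    for _ in range(rounds):
        # In a real deployment each call runs at a separate site; only results travel.
        updates = [local_update(global_weights, data) for data in site_datasets]
        global_weights = federated_average(updates)
    return global_weights


if __name__ == "__main__":
    # Three hypothetical sites whose data all follow y = 2x + 1
    sites = [
        [(1.0, 3.0), (2.0, 5.0), (3.0, 7.0)],
        [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
        [(1.0, 3.0), (4.0, 9.0)],
    ]
    w, b = train_federated(sites)
    print(f"w={w:.3f}, b={b:.3f}")  # converges close to w=2, b=1
```

Model updates can still reveal information about the underlying data, so production systems typically pair federated learning with secure aggregation or differential privacy.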

5. Access Control and Authentication

Implement strict role-based access controls, limiting who can view or use training data. Multi-factor authentication, audit logging, and need-to-know principles minimize insider threats. Granular permissions ensure only authorized personnel have access to specific data subsets.

Access control measures:

- Multi-factor authentication required

- Role-based permission systems

- Complete audit trail logging

- Time-limited access grants

- Automated access reviews
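A deny-by-default permission check that combines these measures might look like the sketch below. The role names, permission strings, and the `AccessController` class are hypothetical; MFA is represented by a boolean that an identity provider would supply. Every decision is logged, grants expire automatically, and `expired_grants` produces input for periodic access reviews.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone

# Hypothetical roles mapped to the minimum permissions each one needs.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "data_scientist": {"read:training_features"},
    "ml_engineer": {"read:training_features", "write:model_artifacts"},
    "privacy_officer": {"read:training_features", "read:raw_pii"},
}


@dataclass
class Grant:
    role: str
    expires_at: datetime


@dataclass
class AccessController:
    grants: dict[str, Grant] = field(default_factory=dict)
    audit_log: list[tuple[str, str, str, bool]] = field(default_factory=list)

    def grant(self, user: str, role: str, hours: float) -> None:
        """Issue a time-limited role grant; access lapses automatically."""
        expires = datetime.now(timezone.utc) + timedelta(hours=hours)
        self.grants[user] = Grant(role, expires)

    def check(self, user: str, permission: str, mfa_verified: bool) -> bool:
        """Deny by default; allow only with MFA, a live grant, and a matching role."""
        now = datetime.now(timezone.utc)
        grant = self.grants.get(user)
        allowed = bool(
            mfa_verified
            and grant is not None
            and now < grant.expires_at
            and permission in ROLE_PERMISSIONS.get(grant.role, set())
        )
        # Every decision, allowed or denied, is recorded for later audits.
        self.audit_log.append((now.isoformat(), user, permission, allowed))
        return allowed

    def expired_grants(self) -> list[str]:
        """Users whose grants have lapsed, as input to a periodic access review."""
        now = datetime.now(timezone.utc)
        return [user for user, g in self.grants.items() if g.expires_at <= now]


if __name__ == "__main__":
    ac = AccessController()
    ac.grant("alice", "data_scientist", hours=8)
    print(ac.check("alice", "read:training_features", mfa_verified=True))  # True
    print(ac.check("alice", "read:raw_pii", mfa_verified=True))  # False
```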

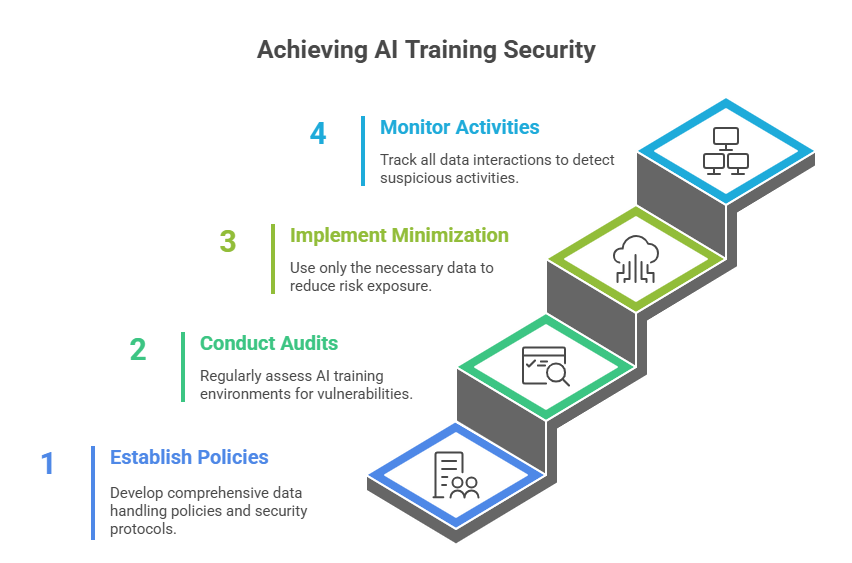

Building a Secure AI Training Environment

Establish Data Governance Policies

Create comprehensive policies that define how data is handled throughout AI training. Document security protocols, access procedures, and incident response plans. Clear governance frameworks guide teams and demonstrate your commitment to security.

Conduct Security Audits Regularly

Assess AI training environments regularly to identify vulnerabilities before attackers can exploit them. Third-party security audits provide objective evaluation and compliance verification, and regular testing keeps protections effective as systems change.

Implement Data Minimization

Use only the minimum data necessary for effective model training. Less data means less exposure if a breach occurs, and it simplifies compliance. Data minimization aligns with privacy regulations such as the GDPR while maintaining AI effectiveness.
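One simple way to enforce data minimization is an allowlist: only fields that a documented training purpose requires pass through. The field names in `TRAINING_FIELDS` below are hypothetical examples for a churn-prediction model.

```python
# Hypothetical allowlist; every field should map to a documented training need.
TRAINING_FIELDS = frozenset({"tenure_months", "plan_type", "monthly_spend", "churned"})


def minimize(records: list[dict], allowed: frozenset[str] = TRAINING_FIELDS) -> list[dict]:
    """Keep only allowlisted fields; everything else is dropped before training."""
    return [{k: v for k, v in r.items() if k in allowed} for r in records]


def dropped_fields(records: list[dict], allowed: frozenset[str] = TRAINING_FIELDS) -> set[str]:
    """Report which fields were removed, useful for compliance documentation."""
    return {k for r in records for k in r} - allowed


if __name__ == "__main__":
    raw = [{"customer_id": 17, "email": "a@example.com", "tenure_months": 26,
            "plan_type": "pro", "monthly_spend": 49.0, "churned": False}]
    print(minimize(raw))
    print(sorted(dropped_fields(raw)))  # ['customer_id', 'email']
```

An allowlist is safer than a blocklist here: if an upstream system adds a new sensitive column, it is excluded by default rather than silently flowing into training.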

Monitor and Log All Activities

Track every interaction with training data through comprehensive logging systems. Real-time monitoring can flag suspicious activity as it happens, and detailed audit trails support incident investigation and compliance reporting.
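To make an audit trail tamper-evident, each log entry can include a hash of the previous entry, so altering any entry or deleting one from the middle of the log breaks the chain. Hash chaining is an illustrative choice rather than something the article prescribes; the `AuditLog` class and its fields are hypothetical, and a production system would also forward entries to write-once storage or a monitoring platform for real-time alerting.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes the same way
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only, hash-chained log of interactions with training data (hypothetical)."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, actor: str, action: str, resource: str) -> dict:
        body = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "resource": resource,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; False if any entry was altered or removed mid-chain."""
        prev = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.record("alice", "read", "datasets/train-v1")
    log.record("bob", "export", "datasets/train-v1")
    print(log.verify())  # True
    log.entries[0]["actor"] = "mallory"  # simulate tampering
    print(log.verify())  # False
```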


Conclusion

Secure AI model training is achievable with the right practices. Strong protection and high-performance AI can coexist through privacy-by-design, encryption, and controlled deployment. Organizations can adopt AI confidently without risking sensitive data or compliance.

Amplework Software ensures safe, compliant, and production-ready AI systems through advanced architectures and expert AI consulting services.

sales@amplework.com

(+91) 9636-962-228