How to Build an AI Platform: Complete Architecture, Tech Stack & Development Blueprint

Introduction

Building an AI platform is fundamentally different from developing standalone AI models. A platform must support multiple use cases, handle diverse workloads, scale efficiently, and provide infrastructure for teams to build, deploy, and manage AI solutions. Many companies underestimate this complexity and struggle with fragmented tools and disconnected systems.

Successful AI platform development requires careful architecture planning, appropriate technology selection, and robust development processes. This blueprint guides you through strategies that transform AI experiments into production-ready platforms.

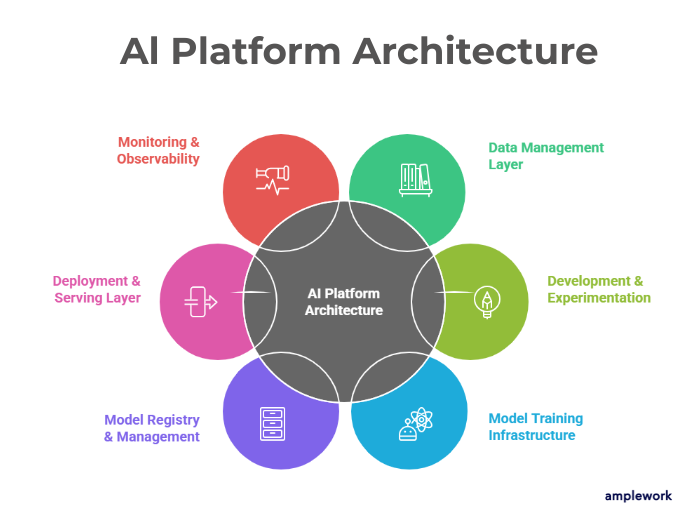

Essential Components of AI Platform Architecture

1. Data Management Layer

Handles ingestion, storage, processing, and governance to ensure clean, accessible, high-quality data.

Key components:

- Data ingestion pipelines

- Feature stores

- Data versioning & lineage

- Quality validation & monitoring

- Metadata management
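As a rough illustration of the quality validation component listed above, the sketch below applies per-column rules to a batch of records before they move further down the pipeline. The `Rule` class, the `validate` function, and the column names are hypothetical and exist only for this example; a real platform would more likely use a dedicated validation tool.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Rule:
    """A named check applied to one column of a record (hypothetical helper)."""
    column: str
    description: str
    check: Callable[[Any], bool]


def validate(records: list[dict[str, Any]], rules: list[Rule]) -> list[str]:
    """Return one readable message per failed check; an empty list means the batch passed."""
    failures = []
    for index, record in enumerate(records):
        for rule in rules:
            value = record.get(rule.column)  # a missing column is checked as None
            if not rule.check(value):
                failures.append(
                    f"row {index}: {rule.column} {rule.description} (got {value!r})"
                )
    return failures


# Example rules; the columns and bounds are assumptions for illustration.
RULES = [
    Rule("user_id", "must be a non-empty string",
         lambda v: isinstance(v, str) and v != ""),
    Rule("age", "must be an int between 0 and 120",
         lambda v: isinstance(v, int) and 0 <= v <= 120),
]

batch = [
    {"user_id": "u1", "age": 34},
    {"user_id": "", "age": 29},
    {"user_id": "u3", "age": 430},
]
problems = validate(batch, RULES)
for problem in problems:
    print(problem)
```

Returning messages instead of raising on the first failure lets an ingestion job report every problem in a batch at once and decide separately whether to reject or quarantine it.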

2. Development & Experimentation Environment

Provides flexible, collaborative environments for model development and reproducible experimentation.

Includes:

- Jupyter notebooks & IDEs

- Experiment tracking systems

- Version control integration

- Collaborative workspaces

- Reusable code libraries
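To make the idea of experiment tracking concrete, here is a minimal sketch that appends each run's parameters and metrics to a JSON Lines file and fingerprints the configuration so repeated runs are easy to spot. The function names and the file layout are assumptions for illustration only; tools such as MLflow or Weights & Biases provide far richer versions of the same idea.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def config_fingerprint(params: dict) -> str:
    """Short, stable hash of a parameter dict; key order does not matter."""
    canonical = json.dumps(params, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def log_run(log_path: Path, params: dict, metrics: dict) -> dict:
    """Append one run to the log file and return the stored record."""
    record = {
        "fingerprint": config_fingerprint(params),
        "params": params,
        "metrics": metrics,
    }
    with log_path.open("a", encoding="utf-8") as handle:
        handle.write(json.dumps(record) + "\n")
    return record


def load_runs(log_path: Path) -> list[dict]:
    """Read every logged run back from disk."""
    with log_path.open(encoding="utf-8") as handle:
        return [json.loads(line) for line in handle if line.strip()]


def best_run(runs: list[dict], metric: str) -> dict:
    """Pick the run with the highest value for the given metric."""
    return max(runs, key=lambda run: run["metrics"][metric])


# Usage with made-up parameters and scores.
log_file = Path(tempfile.mkdtemp()) / "runs.jsonl"
log_run(log_file, {"lr": 0.1, "depth": 3}, {"accuracy": 0.81})
log_run(log_file, {"depth": 5, "lr": 0.01}, {"accuracy": 0.87})
runs = load_runs(log_file)
print(best_run(runs, "accuracy")["params"])
```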

3. Model Training Infrastructure

Supports distributed training, hyperparameter tuning, and compute resource management for efficient AI model development.

Features:

- GPU/TPU orchestration

- Distributed training frameworks

- Automated hyperparameter tuning

- Training pipeline automation

- Cost optimization
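Automated hyperparameter tuning can take many forms. The sketch below shows random search, one of the simplest, under the assumption that each trial is cheap enough to run in-process. The `validation_loss` function is a made-up stand-in for a real training run, and the search space is hypothetical.

```python
import random


def validation_loss(learning_rate: float, batch_size: int) -> float:
    """Stand-in objective (hypothetical): lowest near lr=0.01 and batch_size=64."""
    return (learning_rate - 0.01) ** 2 * 1e4 + ((batch_size - 64) / 64) ** 2


# Each entry maps a parameter name to a sampler that draws from a seeded RNG.
SEARCH_SPACE = {
    "learning_rate": lambda rng: 10 ** rng.uniform(-4, -1),  # log-uniform draw
    "batch_size": lambda rng: rng.choice([16, 32, 64, 128]),
}


def random_search(objective, space, trials: int, seed: int = 0):
    """Sample `trials` configurations and return the best (params, score) pair."""
    rng = random.Random(seed)  # a fixed seed makes the search reproducible
    best_params, best_score = None, float("inf")
    for _ in range(trials):
        params = {name: sample(rng) for name, sample in space.items()}
        score = objective(**params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


best_params, best_score = random_search(validation_loss, SEARCH_SPACE, trials=50)
print(best_params, best_score)
```

On a real platform each trial would typically be dispatched to the training infrastructure as a separate job, and the loop above would become a scheduler that collects results.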

4. Model Registry & Management

Centralizes model versions, metadata, metrics, and deployment workflows for governance and lifecycle management.

Capabilities:

- Version control & lineage tracking

- Performance metrics storage

- Model comparison tools

- Deployment approval workflows
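The registry capabilities above can be sketched as a small in-memory class that assigns version numbers, stores metrics, and refuses to promote a model without a named approver. Everything here (`ModelRegistry`, the stage names, the approval rule) is a hypothetical simplification, not the interface of any particular registry product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ModelVersion:
    name: str
    version: int
    metrics: dict
    stage: str = "registered"  # assumed stages: registered, production, archived
    approved_by: Optional[str] = None


class ModelRegistry:
    """In-memory registry; a real one would persist to a database."""

    def __init__(self) -> None:
        self._versions: dict[str, list[ModelVersion]] = {}

    def register(self, name: str, metrics: dict) -> ModelVersion:
        """Store a new version; version numbers start at 1 per model name."""
        versions = self._versions.setdefault(name, [])
        entry = ModelVersion(name, len(versions) + 1, dict(metrics))
        versions.append(entry)
        return entry

    def get(self, name: str, version: int) -> ModelVersion:
        return self._versions[name][version - 1]

    def promote(self, name: str, version: int, approved_by: str) -> ModelVersion:
        """Move a version to production and archive the previous production version."""
        if not approved_by:
            raise PermissionError("promotion requires a named approver")
        target = self.get(name, version)
        for other in self._versions[name]:
            if other.stage == "production":
                other.stage = "archived"
        target.stage = "production"
        target.approved_by = approved_by
        return target

    def production(self, name: str) -> Optional[ModelVersion]:
        """Return the current production version, or None if there is none."""
        return next(
            (v for v in self._versions.get(name, []) if v.stage == "production"),
            None,
        )


registry = ModelRegistry()
v1 = registry.register("churn", {"auc": 0.81})
v2 = registry.register("churn", {"auc": 0.86})
registry.promote("churn", 1, approved_by="reviewer-a")
registry.promote("churn", 2, approved_by="reviewer-b")
print(registry.production("churn"))
```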

5. Deployment & Serving Layer

Manages production deployment, API handling, scaling, and reliable model serving.

Components:

- Model serving frameworks

- API gateway management

- Auto-scaling infrastructure

- A/B testing & canary deployments
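One way to picture a canary deployment is as a deterministic traffic split: a fixed share of request IDs goes to the new model and the rest stays on the stable one. The sketch below assumes each request carries a stable identifier; the `route` function and the bucket scheme are illustrative choices, not a specific gateway's behavior.

```python
import hashlib


def route(request_id: str, canary_percent: int) -> str:
    """Return 'canary' or 'stable' for a request, deterministically by its ID."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # bucket in 0..99
    return "canary" if bucket < canary_percent else "stable"


# Simulate 10,000 requests with a 10% canary share.
assignments = [route(f"user-{i}", 10) for i in range(10_000)]
canary_share = assignments.count("canary") / len(assignments)
print(f"canary share: {canary_share:.3f}")
```

Hashing the ID rather than drawing a random number keeps each caller on the same variant across requests, and raising the percentage only ever moves callers from stable to canary, never the other way.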

6. Monitoring & Observability

Tracks production models so that performance holds steady, anomalies are detected early, and failures are caught before they affect users.

Monitoring includes:

- Model performance metrics

- Data drift detection

- Prediction latency tracking

- Resource utilization

- Error rate & anomaly alerts
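Data drift detection can be implemented in several ways. The sketch below uses the Population Stability Index (PSI), a metric chosen here for illustration and not named in the list above. It compares how a feature's values fall into bins at training time against the bins seen in production. The bin count, the floor value, and the sample data are assumptions, and both inputs are assumed to be non-empty.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index between a reference sample and a new sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all reference values are equal

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for value in values:
            index = int((value - lo) / width)
            index = min(max(index, 0), bins - 1)  # clamp outliers into the edge bins
            counts[index] += 1
        # A small floor keeps empty bins from producing log(0).
        return [max(count / len(values), 1e-6) for count in counts]

    ref, new = proportions(expected), proportions(actual)
    return sum((n - r) * math.log(n / r) for r, n in zip(ref, new))


# Synthetic data: a reference feature and the same feature shifted upward.
reference = [i / 100 for i in range(1000)]
shifted = [value + 3 for value in reference]

print("no drift:", psi(reference, reference))
print("shifted: ", psi(reference, shifted))
```

A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but these thresholds are conventions and should be tuned per feature.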

AI Platform Tools and Technology Stack

| Architecture Layer | Tools & Technologies |
| --- | --- |
| Data Infrastructure | S3, Azure Blob, GCS, PostgreSQL, MongoDB, Spark, Dask, Airflow, Prefect, Feast, Tecton |
| Model Development | TensorFlow, PyTorch, scikit-learn, MLflow, Weights & Biases, JupyterLab, Colab |
| Training Infrastructure | Kubernetes, Kubeflow, Horovod, Ray, AWS SageMaker, Azure ML, Vertex AI |
| Model Deployment | TensorFlow Serving, TorchServe, Seldon Core, Kong, AWS API Gateway, Docker, Kubernetes |
| Monitoring & Operations | Prometheus, Grafana, ELK Stack, Splunk, Evidently AI, Fiddler |

AI Platform Development Process

1. Requirements and Planning

Define scope, user personas, use cases, success metrics, budget, timeline, and team capabilities. Clear requirements guide architecture and prevent scope creep.

2. Architecture Design

Design scalable architecture, select technologies, plan integrations, and ensure security, compliance, and governance are incorporated from the start.

3. MVP Development

Build a minimum viable platform with core workflows, data pipelines, training infrastructure, and basic deployment. Test real use cases and gather feedback.

4. Integration and Testing

Integrate components, implement APIs, and connect the platform to existing systems, using AI integration services where they help. Conduct load, security, and failure testing to verify that the system stays reliable under stress.

5. Documentation and Training

Prepare comprehensive documentation, tutorials, and best practices. Train teams to use the platform effectively, accelerating adoption and reducing support needs.

6. Production Deployment and Improvement

Deploy gradually, monitor performance, and gather feedback. Use what you learn to optimize components, add new features, and keep pace with evolving technologies and best practices.
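A gradual deployment is often driven by a simple gate that widens traffic in steps and rolls back when the new version misbehaves. The sketch below is one possible shape for such a gate; the step sizes, the error-rate comparison, and the tolerance are all assumptions for illustration.

```python
# Assumed rollout schedule: share of traffic served by the new version.
ROLLOUT_STEPS = [5, 25, 50, 100]


def next_step(
    current_percent: int,
    baseline_error_rate: float,
    candidate_error_rate: float,
    tolerance: float = 0.01,
) -> int:
    """Return the next traffic percentage, or 0 to signal a rollback."""
    if candidate_error_rate > baseline_error_rate + tolerance:
        return 0  # the new version is measurably worse: roll back
    later_steps = [step for step in ROLLOUT_STEPS if step > current_percent]
    return later_steps[0] if later_steps else 100  # stay at 100 once fully rolled out


print(next_step(5, baseline_error_rate=0.02, candidate_error_rate=0.02))
print(next_step(25, baseline_error_rate=0.02, candidate_error_rate=0.05))
```

In practice the gate would also consider latency and business metrics, and would wait for enough traffic at each step before deciding.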

Common AI Platform Development Challenges

- Complexity Management: AI platforms involve many moving parts. Start simple and add complexity only when needed. Avoid over-engineering the early stages.

- Technology Selection: Numerous tools exist for each component. Choose mature, well-supported technologies with strong communities. Avoid bleeding-edge tools for critical infrastructure.

- Scalability Planning: Design for growth, but don’t over-optimize prematurely. Build scalable foundations while keeping initial implementation manageable.

- User Adoption: The best platform fails without user adoption. Involve users early, gather feedback frequently, and prioritize usability alongside functionality.

The Amplework AI Platform Development Advantage

At Amplework Software, we provide end-to-end AI Development Services, building custom platforms from architecture design to production deployment. When you hire ML engineers, you gain expertise in scalable infrastructure, MLOps pipelines, integration, and AI PoC validation, ensuring platforms meet real organizational needs efficiently and effectively.

Final Words

Building an AI platform transforms how organizations develop and deploy AI solutions. A production-ready platform combines data engineering, ML, DevOps, and architecture expertise, accelerating innovation, reducing technical debt, and ensuring usability. Organizations can get started by scheduling a platform architecture consultation to plan and implement a robust AI infrastructure.

sales@amplework.com

(+91) 9636-962-228
