Moving From AI PoC to Production: Checklist, Roadmap & Best Practices for Scaling

Introduction

The transition from AI proof of concept (PoC) to production is where most AI initiatives fail. Your PoC works perfectly in a controlled environment with clean data and patient stakeholders, but production demands a level of reliability, scalability, and performance that PoCs rarely address.

The gap between “it works on my laptop” and “it runs reliably for 10,000 users” contains countless technical, operational, and organizational challenges. This guide provides a practical roadmap for PoC-to-production AI transitions that actually succeed.

Understanding the PoC to Production Gap

Your PoC validates feasibility with sample data, minimal users, and simplified workflows. Production systems must handle real-world data quality issues, concurrent user loads, integration with existing infrastructure, security and compliance requirements, continuous monitoring and maintenance, and performance expectations measured in milliseconds.

This isn’t just about making your PoC bigger; it’s about rebuilding it with production-grade architecture, reliability, and operational discipline.

AI Deployment Checklist: Critical Requirements

Infrastructure and Architecture

Before scaling your AI PoC to production, verify these foundational elements:

- Scalable Computing Resources: Can your infrastructure handle 10x current load? Production systems need auto-scaling capabilities, load balancing across multiple instances, failover mechanisms for high availability, and resource monitoring tools.

- Production-Grade Data Pipeline: Your PoC likely processed static datasets. Production requires real-time data ingestion, automated data validation and quality checks, data versioning and lineage tracking, and backup procedures.

- Model Serving Infrastructure: Efficient deployment requires containerized models (for example, with Docker) orchestrated by a platform such as Kubernetes, API endpoints with authentication and rate limiting, model versioning and rollback capabilities, and A/B testing infrastructure for gradual rollouts.
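
The "model versioning and rollback" requirement is easiest to see in a small sketch. The Python below is a minimal, in-memory illustration under the assumption that a model can be treated as a plain callable; `ModelRegistry`, `promote`, and `rollback` are hypothetical names, not the API of any particular registry product. A production registry would also persist model artifacts and metadata.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical stand-in: a "model" is any callable from a feature vector to a score.
Model = Callable[[List[float]], float]


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry: every version stays registered, so rollback is instant."""

    versions: Dict[str, Model] = field(default_factory=dict)
    history: List[str] = field(default_factory=list)  # stack of promoted versions, newest last

    def register(self, version: str, model: Model) -> None:
        if version in self.versions:
            raise ValueError(f"version {version!r} is already registered")
        self.versions[version] = model

    def promote(self, version: str) -> None:
        if version not in self.versions:
            raise KeyError(version)
        self.history.append(version)

    def rollback(self) -> str:
        # Retire the live version and fall back to the one promoted before it.
        if len(self.history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.history.pop()
        return self.history[-1]

    @property
    def live_version(self) -> str:
        if not self.history:
            raise RuntimeError("no version has been promoted yet")
        return self.history[-1]

    def predict(self, features: List[float]) -> float:
        return self.versions[self.live_version](features)


registry = ModelRegistry()
registry.register("v1", lambda x: sum(x))
registry.register("v2", lambda x: 2 * sum(x))
registry.promote("v1")
registry.promote("v2")
print(registry.live_version, registry.predict([1.0, 2.0]))  # v2 6.0
registry.rollback()
print(registry.live_version, registry.predict([1.0, 2.0]))  # v1 3.0
```

Keeping a stack of promoted versions, rather than a single pointer, is what makes rollback a one-step operation: the previous version is still registered and can be served again immediately.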

Security and Compliance

Production AI scaling demands rigorous security that PoCs often skip. Encrypt data at rest and in transit, implement strict access controls and authentication, maintain comprehensive audit logs, and ensure compliance with relevant regulations (such as GDPR or HIPAA) and any industry-specific standards.

Protect model intellectual property, defend against adversarial attacks, secure API endpoints against unauthorized access, and validate all inputs to block injection attacks.
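
Input validation is easiest to enforce as an allow-list: accept only the fields, types, and ranges the endpoint expects, and reject everything else. The sketch below assumes a hypothetical prediction endpoint that takes a `user_id` and a list of numeric `features`; the schema and the limits `MAX_FEATURES` and `MAX_ID_LENGTH` are illustrative assumptions. Validation of this kind is one layer of defense and complements, rather than replaces, parameterized queries and other injection safeguards downstream.

```python
import math
from typing import Any, Dict, List

# Hypothetical request schema for a prediction endpoint: field name -> expected type.
SCHEMA = {"user_id": str, "features": list}
MAX_FEATURES = 100   # assumed limit; size it to your model
MAX_ID_LENGTH = 64   # assumed limit


def validate_request(payload: Dict[str, Any]) -> List[str]:
    """Allow-list validation: return error messages, or an empty list if the payload is acceptable."""
    errors: List[str] = []
    unexpected = set(payload) - set(SCHEMA)
    if unexpected:
        errors.append(f"unexpected fields: {sorted(unexpected)}")
    for name, expected_type in SCHEMA.items():
        if name not in payload:
            errors.append(f"missing field: {name}")
        elif not isinstance(payload[name], expected_type):
            errors.append(f"{name} must be of type {expected_type.__name__}")
    if errors:
        return errors  # stop before inspecting values of the wrong shape

    user_id = payload["user_id"]
    if not (0 < len(user_id) <= MAX_ID_LENGTH and user_id.isalnum()):
        errors.append(f"user_id must be 1-{MAX_ID_LENGTH} alphanumeric characters")

    features = payload["features"]
    if not 0 < len(features) <= MAX_FEATURES:
        errors.append(f"features must contain 1-{MAX_FEATURES} values")
    # bool is a subclass of int in Python, so reject it explicitly.
    elif not all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in features):
        errors.append("features must be numeric")
    elif not all(math.isfinite(v) for v in features):
        errors.append("features must be finite (no NaN or infinity)")
    return errors


print(validate_request({"user_id": "abc123", "features": [0.5, 1.0]}))  # []
print(validate_request({"user_id": "1; DROP TABLE users", "features": [0.5]}))
# ['user_id must be 1-64 alphanumeric characters']
```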

Performance and Monitoring

Define clear service level objectives for latency, throughput, availability, and accuracy. Implement comprehensive monitoring, including model performance tracking for accuracy drift, infrastructure monitoring for system health, user experience monitoring for end-to-end performance, and automated alerting for anomalies and failures.
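
Service level objectives only help if something checks them automatically. This Python sketch evaluates one monitoring window against three example objectives (p95 latency, availability, and accuracy) and returns one alert per breach. The `SLO` thresholds are placeholder assumptions and `check_slo` is a hypothetical helper; in practice these metrics would come from your monitoring stack rather than in-memory lists.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class SLO:
    # Example targets for illustration only; set these from your own requirements.
    p95_latency_ms: float = 200.0
    min_availability: float = 0.999
    min_accuracy: float = 0.90


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def check_slo(latencies_ms: Sequence[float], total_requests: int, failed_requests: int,
              correct: int, labeled: int, slo: SLO) -> List[str]:
    """Return one alert message per breached objective (an empty list means all SLOs are met)."""
    alerts: List[str] = []
    if latencies_ms:
        p95 = percentile(latencies_ms, 95)
        if p95 > slo.p95_latency_ms:
            alerts.append(f"p95 latency {p95:.0f} ms exceeds {slo.p95_latency_ms:.0f} ms")
    if total_requests:
        availability = 1 - failed_requests / total_requests
        if availability < slo.min_availability:
            alerts.append(f"availability {availability:.4f} is below {slo.min_availability}")
    # Accuracy needs ground-truth labels, which usually arrive later than the predictions.
    if labeled:
        accuracy = correct / labeled
        if accuracy < slo.min_accuracy:
            alerts.append(f"accuracy {accuracy:.2f} is below {slo.min_accuracy}")
    return alerts


# One window: 10% slow requests, 5 failures in 1,000 calls, 80 of 100 labeled predictions correct.
for alert in check_slo([100.0] * 90 + [500.0] * 10, 1000, 5, 80, 100, SLO()):
    print(alert)
```

The nearest-rank percentile is used here because it always returns an observed latency; monitoring systems often use interpolated or histogram-based estimates instead, which can differ slightly.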

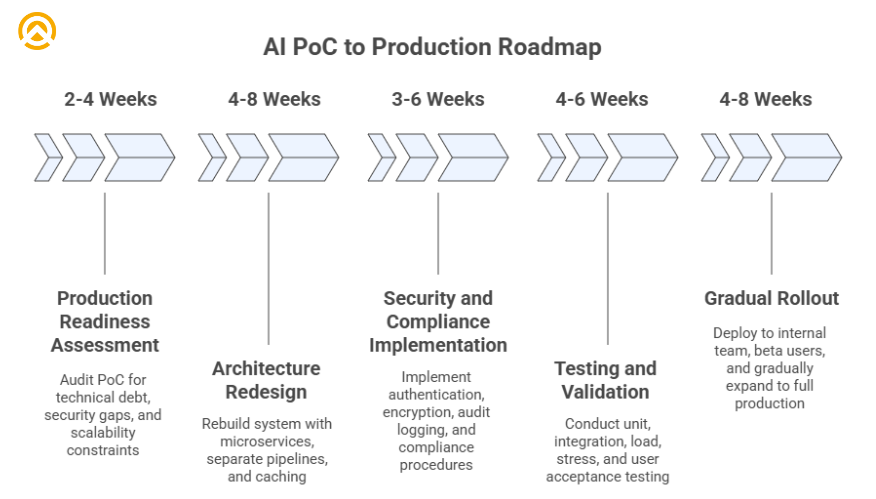

AI PoC to Production Roadmap

Phase 1: Production Readiness Assessment (2-4 Weeks)

Audit your PoC against production requirements. Identify technical debt, architectural limitations, security gaps, and scalability constraints. Conduct code quality reviews, infrastructure capacity planning, security gap analysis, and integration requirements mapping.
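
Infrastructure capacity planning can start from a back-of-the-envelope estimate. The sketch below applies Little's law (average requests in flight equals arrival rate times latency) to size a serving fleet for today's load and for the 10x load mentioned in the checklist. The traffic numbers, per-instance concurrency, and 30% `headroom` are hypothetical inputs, and `required_instances` is an illustrative helper. Little's law describes long-run averages, so the result is a starting point to confirm with load testing in Phase 4, not a final answer.

```python
import math


def required_instances(peak_rps: float, mean_latency_s: float,
                       concurrency_per_instance: int, headroom: float = 1.3) -> int:
    """Estimate serving instances with Little's law: requests in flight = arrival rate x latency.

    `headroom` is an assumed safety margin for bursts and instance failures.
    """
    in_flight = peak_rps * mean_latency_s  # average number of concurrent requests
    return math.ceil(in_flight * headroom / concurrency_per_instance)


# Hypothetical PoC measurements: 50 requests/s at peak, 200 ms mean latency,
# and each instance handling 8 requests concurrently.
today = required_instances(50, 0.2, 8)
at_10x = required_instances(500, 0.2, 8)
print(today, at_10x)  # 2 17
```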

Phase 2: Architecture Redesign (4-8 Weeks)

Rebuild your system with production architecture principles; most PoCs need significant restructuring before they can run in production. Implement a microservices design for independent scaling, separate AI model training and inference pipelines, caching strategies for frequently requested predictions, and queue-based processing to absorb load spikes.
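
Two of these patterns, caching frequent predictions and queue-based processing, fit in a few lines of standard-library Python. In this sketch `run_model` is a hypothetical stand-in for real inference, `functools.lru_cache` memoizes repeated inputs, and a bounded `queue.Queue` with a fixed pool of worker threads absorbs bursts. In a production microservice the queue would usually be an external message broker and the cache a shared store, but the control flow is similar.

```python
import queue
import threading
from functools import lru_cache
from typing import Dict, List, Tuple


def run_model(features: Tuple[float, ...]) -> float:
    """Hypothetical stand-in for an expensive model call."""
    return sum(features) / len(features)


@lru_cache(maxsize=10_000)
def cached_predict(features: Tuple[float, ...]) -> float:
    # Cache keys must be hashable, so features arrive as a tuple rather than a list.
    return run_model(features)


def start_workers(jobs: "queue.Queue", results: Dict[int, float],
                  n_workers: int = 4) -> List[threading.Thread]:
    """A fixed worker pool drains the queue, so bursts wait in line instead of overloading the model."""
    lock = threading.Lock()

    def worker() -> None:
        while True:
            item = jobs.get()
            if item is None:  # sentinel: shut this worker down
                jobs.task_done()
                return
            job_id, features = item
            prediction = cached_predict(features)
            with lock:
                results[job_id] = prediction
            jobs.task_done()

    threads = [threading.Thread(target=worker, daemon=True) for _ in range(n_workers)]
    for t in threads:
        t.start()
    return threads


jobs: "queue.Queue" = queue.Queue(maxsize=1_000)  # bounded, so producers feel back-pressure
results: Dict[int, float] = {}
workers = start_workers(jobs, results)
for job_id in range(20):
    jobs.put((job_id, (1.0, 2.0, 3.0)))  # identical inputs: later calls can be cache hits
for _ in workers:
    jobs.put(None)
jobs.join()  # block until every queued item has been processed
print(len(results), results[0])  # 20 2.0
```

Bounding the queue matters: when it fills up, `put` blocks, so producers slow down instead of the model servers falling over.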

Phase 3: Security and Compliance Implementation (3-6 Weeks)

Address security and regulatory requirements that PoCs typically defer. Implement authentication and authorization, encrypt sensitive communications, establish audit logging, document compliance procedures, and conduct security testing.
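
Authentication, authorization, and audit logging often meet in a single request check. The sketch below assumes clients send a client ID plus an API key; the key store `API_KEY_HASHES`, the `PERMISSIONS` map, and the `authorize` function are hypothetical. It stores only SHA-256 digests of keys, compares them with `hmac.compare_digest` (which avoids content-based short-circuiting and so resists timing attacks), and writes one JSON audit record per decision.

```python
import hashlib
import hmac
import json
import logging
from datetime import datetime, timezone
from typing import Dict, Optional, Set

logging.basicConfig(level=logging.INFO, format="%(message)s")
audit_log = logging.getLogger("audit")

# Hypothetical key store: client id -> SHA-256 hex digest of its API key (raw keys are never stored).
API_KEY_HASHES: Dict[str, str] = {
    "client-a": hashlib.sha256(b"example-secret-key").hexdigest(),
}
# Hypothetical role-based permissions.
PERMISSIONS: Dict[str, Set[str]] = {"client-a": {"predict"}}


def authorize(client_id: str, api_key: str, action: str) -> bool:
    """Authenticate the caller, check its permission for `action`, and write an audit record."""
    expected: Optional[str] = API_KEY_HASHES.get(client_id)
    presented = hashlib.sha256(api_key.encode()).hexdigest()
    # compare_digest does not stop at the first differing character, which resists timing attacks.
    authenticated = expected is not None and hmac.compare_digest(presented, expected)
    allowed = authenticated and action in PERMISSIONS.get(client_id, set())
    audit_log.info(json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "client_id": client_id,
        "action": action,
        "authenticated": authenticated,
        "allowed": allowed,
    }))  # the key itself is never logged
    return allowed


print(authorize("client-a", "example-secret-key", "predict"))  # True
print(authorize("client-a", "example-secret-key", "retrain"))  # False
```

A fast hash such as SHA-256 is reasonable for long, randomly generated API keys; user passwords need a deliberately slow hash such as bcrypt, scrypt, or Argon2.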

Phase 4: Testing and Validation (4-6 Weeks)

Rigorous testing separates successful launches from disasters. Run unit and integration tests, load tests to verify scalability, stress tests to identify breaking points, and user acceptance testing with real workflows.
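
The difference between load testing and stress testing is easier to see in code: a load test checks behavior at an expected concurrency, while a stress test keeps raising concurrency until the system breaks. The sketch below runs both against `SimulatedService`, a hypothetical stand-in that rejects requests beyond a fixed capacity; the stage sizes and the 1% error budget are assumptions. Against a real deployment you would point a dedicated load-testing tool at a staging environment, but the ramp-and-stop logic is the same.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Sequence


class SimulatedService:
    """Hypothetical stand-in for a deployed model API that rejects requests beyond its capacity."""

    def __init__(self, capacity: int, latency_s: float = 0.01) -> None:
        self._slots = threading.BoundedSemaphore(capacity)
        self._latency_s = latency_s

    def predict(self) -> bool:
        if not self._slots.acquire(blocking=False):
            return False  # overloaded: request rejected
        try:
            time.sleep(self._latency_s)  # simulated inference time
            return True
        finally:
            self._slots.release()


def run_stage(call: Callable[[], bool], concurrency: int, n_requests: int) -> float:
    """Load test: send n_requests with `concurrency` parallel clients and return the error rate."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        outcomes = list(pool.map(lambda _: call(), range(n_requests)))
    return outcomes.count(False) / n_requests


def find_breaking_point(call: Callable[[], bool],
                        stages: Sequence[int] = (1, 2, 4, 8, 16, 32),
                        max_error_rate: float = 0.01) -> Dict[int, float]:
    """Stress test: raise concurrency stage by stage and stop at the first stage over the error budget."""
    report: Dict[int, float] = {}
    for concurrency in stages:
        report[concurrency] = run_stage(call, concurrency, n_requests=concurrency * 20)
        if report[concurrency] > max_error_rate:
            break
    return report


report = find_breaking_point(SimulatedService(capacity=4).predict)
print(report)  # error rate per concurrency level; the last entry is the first one over budget
```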

Phase 5: Gradual Rollout (4-8 Weeks)

Never launch directly to full production. Start with an internal deployment for your own team, move to a limited beta with 5-10% of users, expand gradually while monitoring performance, A/B test against existing solutions, and reach full production only after validation.
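
A gradual rollout needs a way to assign each user to the old or new model consistently across requests. One common approach, sketched below under the assumption that every request carries a stable `user_id`, is to hash the user ID into a bucket from 0 to 100 and serve the new model when the bucket falls below the current rollout percentage. `rollout_bucket`, `serves_new_model`, and the `release` label are hypothetical names.

```python
import hashlib
from typing import AbstractSet


def rollout_bucket(user_id: str, release: str = "model-v2") -> float:
    """Map a user to a stable bucket in [0, 100); the same user always lands in the same bucket."""
    digest = hashlib.sha256(f"{release}:{user_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") % 10_000 / 100


def serves_new_model(user_id: str, rollout_percent: float,
                     internal_users: AbstractSet[str] = frozenset()) -> bool:
    # Stage 1: the internal team always gets the new model.
    if user_id in internal_users:
        return True
    # Later stages raise rollout_percent (e.g. 5 -> 10 -> 50 -> 100); users already
    # in the rollout stay in, because their bucket is below every larger threshold.
    return rollout_bucket(user_id) < rollout_percent


users = [f"user-{i}" for i in range(10_000)]
share = sum(serves_new_model(u, 10) for u in users) / len(users)
print(f"{share:.1%} of users on the new model")  # close to 10%, not exactly
```

Because each user's bucket never changes, raising the percentage from 5% to 10% only adds users; nobody who already saw the new model is switched back. Including the `release` label in the hash keeps bucket assignments independent across different rollouts.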

Best Practices for Successful AI Scaling

- Maintain Model Performance: Production environments differ from PoC conditions. Implement continuous monitoring to detect model drift, establish retraining schedules, automate model evaluation pipelines, and maintain performance baselines.

- Plan for Operational Overhead: AI scaling isn’t a one-time project; it’s ongoing. Budget 20-30% of development effort for model retraining, performance monitoring, bug fixes, security updates, and user support.

- Build Cross-Functional Teams: Successful transitions require collaboration across data scientists, ML engineers, DevOps teams, security teams, and product managers. Siloed teams create integration failures.
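
Detecting model drift requires comparing production inputs (or predictions) against a baseline. The sketch below uses the Population Stability Index (PSI), one common drift statistic; the article does not prescribe a specific metric, so treat this as one option among several. It bins the baseline at its quantiles and sums (a - e) * ln(a / e) over the bins, where e and a are the baseline and current proportions. A commonly cited rule of thumb reads PSI below 0.1 as stable, 0.1-0.25 as moderate shift, and above 0.25 as significant shift, but these cutoffs are heuristics to calibrate against your own data.

```python
import bisect
import math
from typing import List, Sequence


def psi(baseline: Sequence[float], current: Sequence[float],
        n_bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `current` against `baseline`: sum of (a - e) * ln(a / e) over bins."""
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")
    ordered = sorted(baseline)
    # Bin edges at baseline quantiles, so each bin holds about 1/n_bins of the baseline.
    edges = [ordered[len(ordered) * i // n_bins] for i in range(1, n_bins)]

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * n_bins
        for v in values:
            counts[bisect.bisect_right(edges, v)] += 1
        # Floor empty bins at eps to avoid log(0) and division by zero.
        return [max(c / len(values), eps) for c in counts]

    expected, actual = proportions(baseline), proportions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


baseline = [i / 1000 for i in range(1000)]  # e.g. a feature's distribution at training time
same = psi(baseline, baseline)              # identical distributions: no shift
shifted = psi(baseline, [0.5 + i / 2000 for i in range(1000)])  # mass moved to the upper half
print(same, shifted > 0.25)  # 0.0 True
```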

Common Pitfalls to Avoid

- Underestimating Timeline and Resources: PoC-to-production AI transitions typically require 3-6 months, not weeks.

- Skipping Gradual Rollouts: Launching to all users at once amplifies the impact of any failure.

- Neglecting Monitoring: You can’t manage what you don’t measure.

- Ignoring Production Data Quality: Real-world data arrives messy and unpredictable, unlike the clean datasets PoCs are built on.
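
The phase estimates in the roadmap give a quick sanity check on the 3-6 month figure. The sketch below simply adds them up, assuming the phases run strictly back to back, which the roadmap does not state; `total_duration` is an illustrative helper.

```python
# Phase durations in weeks (min, max), taken from the roadmap above.
PHASES = {
    "Production readiness assessment": (2, 4),
    "Architecture redesign": (4, 8),
    "Security and compliance implementation": (3, 6),
    "Testing and validation": (4, 6),
    "Gradual rollout": (4, 8),
}

WEEKS_PER_MONTH = 52 / 12  # about 4.33


def total_duration(phases):
    """Sum the phase estimates, assuming the phases run back to back with no overlap."""
    low = sum(lo for lo, _ in phases.values())
    high = sum(hi for _, hi in phases.values())
    return low, high


low, high = total_duration(PHASES)
print(f"{low}-{high} weeks, roughly {low / WEEKS_PER_MONTH:.1f}-{high / WEEKS_PER_MONTH:.1f} months")
# 17-32 weeks, roughly 3.9-7.4 months
```

Run sequentially, the phases total 17-32 weeks, so landing within 3-6 months implies that some phases overlap (for example, security work proceeding alongside the architecture redesign).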

Scale Your AI Successfully

Transitioning from AI PoC to production requires technical expertise, operational discipline, and cross-functional coordination that many organizations lack internally. At Amplework Software, our AI integration services ensure seamless connection with your existing infrastructure and support you through every phase of the AI scaling journey with proven frameworks.

sales@amplework.com

(+91) 9636-962-228
